
"STOP! Show your classical apparatus!" Delineating the boundary between the quantum and classical worlds is one of the unresolved problems of physics.

Decoherence and the Transition from Quantum to Classical

Wojciech Zurek, Physics Today, October 1991 (abridged)

Quantum mechanics works exceedingly well in all practical applications. No example of conflict between its predictions and experiment is known. Without quantum physics we could not explain the behavior of solids, the structure and function of DNA, the color of the stars, the action of lasers or the properties of superfluids. Yet well over half a century after its inception, the debate about the relation of quantum mechanics to the familiar physical world continues. How can a theory that can account with precision for everything we can measure still be deemed lacking?

What is wrong with quantum theory?

The only "failure" of quantum theory is its inability to provide a natural framework that can accommodate our prejudices about the workings of the universe. States of quantum systems evolve according to the deterministic, linear Schrödinger equation,

$$i\hbar \frac{d}{dt}|\psi\rangle = H|\psi\rangle. \qquad (1)$$

That is, just as in classical mechanics, given the initial state of the system and its Hamiltonian $H$, one can compute the state at an arbitrary time. This deterministic evolution of $|\psi\rangle$ has been verified in carefully controlled experiments. Moreover, there is no indication of a border between quantum and classical behavior at which equation 1 fails. There is, however, a very poorly controlled experiment with results so tangible and immediate that it has an enormous power to convince: Our perceptions are often difficult to reconcile with the predictions of equation 1.
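To make "deterministic, linear" concrete, here is a minimal Python sketch. It assumes NumPy and sets $\hbar = 1$. It integrates equation 1 exactly for a time-independent Hamiltonian by diagonalizing $H$. The two-level Hamiltonian $H = \sigma_x$ and the amplitudes are illustrative assumptions, not taken from the article. The check at the end confirms that evolving a superposition gives the same state as superposing the separately evolved states, which is the linearity at the root of the measurement problem.

```python
import numpy as np

HBAR = 1.0  # natural units, assumed for illustration

def evolve(psi0, H, t):
    """Exact solution of i*hbar d|psi>/dt = H|psi> for a time-independent Hermitian H:
    |psi(t)> = exp(-i H t / hbar) |psi(0)>, built from the eigendecomposition of H."""
    energies, vecs = np.linalg.eigh(H)
    phases = np.exp(-1j * energies * t / HBAR)
    U = vecs @ np.diag(phases) @ vecs.conj().T
    return U @ psi0

# Illustrative spin-1/2 Hamiltonian (hypothetical choice): H = sigma_x
H = np.array([[0, 1], [1, 0]], dtype=complex)
up = np.array([1, 0], dtype=complex)
down = np.array([0, 1], dtype=complex)
alpha, beta = 0.6, 0.8j  # |alpha|^2 + |beta|^2 = 1

psi_t = evolve(alpha * up + beta * down, H, t=0.7)
# Linearity: the evolved superposition equals the superposition of evolved states.
superposed = alpha * evolve(up, H, 0.7) + beta * evolve(down, H, 0.7)
print(np.allclose(psi_t, superposed))
# Unitary evolution preserves the norm of the state.
print(np.isclose(np.linalg.norm(psi_t), 1.0))
```

Diagonalizing $H$ once gives the exact propagator for any $t$. The same deterministic map applied to each branch of a superposition is what carries the superposition forward unchanged in form.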

Why? Given almost any initial condition, the universe described by $|\psi\rangle$ evolves into a state that simultaneously contains many alternatives never seen to coexist in our world. Moreover, while the ultimate evidence for the choice of one such option resides in our elusive "consciousness," there is every indication that the choice occurs long before consciousness ever gets involved. Thus at the root of our unease with quantum mechanics is the clash between the principle of superposition (the consequence of the linearity of equation 1) and the everyday classical reality in which this principle appears to be violated.

The problem of measurement has a long and fascinating history. The first widely accepted explanation of how a single outcome emerges from the many possibilities was the Copenhagen interpretation, proposed by Niels Bohr, who insisted that a classical apparatus is necessary to carry out measurements. Thus quantum theory was not to be universal. The key feature of the Copenhagen interpretation is the dividing line between quantum and classical. Bohr emphasized that the border must be mobile, so that even the "ultimate apparatus," the human nervous system, can be measured and analyzed as a quantum object, provided that a suitable classical device is available to carry out the task. In the absence of a crisp criterion to distinguish between quantum and classical, an identification of the "classical" with the "macroscopic" has often been tentatively accepted. The inadequacy of this approach has become apparent as a result of relatively recent developments. A cryogenic version of the Weber bar, a gravity wave detector, must be treated as a quantum harmonic oscillator even though it can weigh a ton. Nonclassical squeezed states can describe oscillations of suitably prepared electromagnetic fields with macroscopic numbers of photons. Superconducting Josephson junctions have quantum states associated with currents involving macroscopic numbers of electrons, and yet they can tunnel between the minima of the effective potential.

If macroscopic systems cannot always be safely placed on the classical side of the boundary, might there be no boundary at all? The many-worlds interpretation (or, more accurately, the many-universes interpretation) claims to do away with the boundary. The many-worlds interpretation was developed in the 1950s by Hugh Everett III with the encouragement of John Archibald Wheeler. In this interpretation all of the universe is described by quantum theory. Superpositions evolve forever according to the Schrödinger equation. Each time a suitable interaction takes place between any two quantum systems, the wavefunction of the universe splits, so that it develops ever more "branches." Everett's work was initially almost unnoticed. It was taken out of mothballs over a decade later by Bryce DeWitt. The many-worlds interpretation is a natural choice for quantum cosmology, which describes the whole universe by means of a state vector. There is nothing more macroscopic than the universe. It can have no a priori classical subsystems. There can be no observer "on the outside." In this context, classicality has to be an emergent property of the selected observables or systems.

At first glance, the two interpretations, many-worlds and Copenhagen, have little in common. The Copenhagen interpretation demands an a priori "classical domain" with a border that enforces a classical "embargo" by letting through just one potential outcome. The many-worlds interpretation aims to abolish the need for the border altogether: Every potential outcome is accommodated by the ever proliferating branches of the wavefunction of the universe. The similarity of the difficulties faced by these two viewpoints nevertheless becomes apparent when we ask the obvious question "Why do I, the observer, perceive only one of the outcomes?" Quantum theory, with its freedom to rotate bases in Hilbert space, does not even clearly define which states of the universe correspond to branches. Yet our perception of a reality with alternatives, and not a coherent superposition of alternatives, demands an explanation of when, where and how it is decided what the observer actually perceives. Considered in this context, the many-worlds interpretation in its original version does not abolish the border but pushes it all the way to the boundary between the physical universe and consciousness. Needless to say, this is a very uncomfortable place to do physics.

In spite of the profound difficulties and the lack of a breakthrough for some time, recent years have seen a growing consensus that progress is being made in dealing with the measurement problem. The key (and uncontroversial) fact has been known almost since the inception of quantum theory, but its significance for the transition from quantum to classical is being recognized only now: Macroscopic quantum systems are never isolated from their environments. Therefore, as H. Dieter Zeh emphasized, they should not be expected to follow Schrödinger's equation, which is applicable only to a closed system. As a result, systems usually regarded as classical suffer (or benefit) from the natural loss of quantum coherence, which "leaks out" into the environment. The resulting "decoherence" cannot be ignored when one addresses the problem of the reduction of wavepackets: It imposes, in effect, the required embargo on the potential outcomes, only allowing the observer to maintain records of alternatives and to be aware of only one branch.

Correlations and Measurements

(This section gives an abbreviated paraphrase of Zurek's argument)

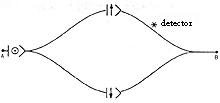

Suppose we consider a measurement of electron spin made with a Stern-Gerlach magnet: an inhomogeneous magnetic field, produced between a pointed and a rounded pole piece, that deflects electrons or atoms of opposite spin in opposite directions.

The above diagram shows the deflection of an electron or atom depending on its spin. Unlike Bohr, who required a classical measurement apparatus, von Neumann assumed that the measurement apparatus could itself be of a quantum nature. If a detector is placed in the spin-up path, it will click only if the electron is spin up. We can therefore take the detector's initial, unclicked state $|d_\downarrow\rangle$ to record spin down. If we start with an electron in the pure state

$$|\psi\rangle = \alpha|\uparrow\rangle + \beta|\downarrow\rangle,$$

then the initial state of the composite system can be described as

$$|\Phi^i\rangle = |\psi\rangle|d_\downarrow\rangle = \left(\alpha|\uparrow\rangle + \beta|\downarrow\rangle\right)|d_\downarrow\rangle,$$

and the combined spin-detector system will evolve into a correlated state:

$$|\Phi^c\rangle = \alpha|\uparrow\rangle|d_\uparrow\rangle + \beta|\downarrow\rangle|d_\downarrow\rangle.$$
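The premeasurement step can be sketched numerically in Python with NumPy under the following assumptions:

- The spin and the detector are each two-level systems.
- The spin-detector interaction is idealized as a hypothetical unitary `U_meas` that flips the detector from $d_\downarrow$ to $d_\uparrow$ only when the spin is up. The text does not specify the actual coupling.

Starting from $(\alpha|\uparrow\rangle + \beta|\downarrow\rangle)|d_\downarrow\rangle$, the sketch produces the correlated state $\alpha|\uparrow\rangle|d_\uparrow\rangle + \beta|\downarrow\rangle|d_\downarrow\rangle$.

```python
import numpy as np

# Basis conventions (assumed for this sketch): index 0 = up, index 1 = down,
# used for both the spin states and the detector states d_up, d_down.
up, down = np.array([1, 0], dtype=complex), np.array([0, 1], dtype=complex)
d_up, d_down = up.copy(), down.copy()

X = np.array([[0, 1], [1, 0]], dtype=complex)   # flips the detector state
I2 = np.eye(2, dtype=complex)
P_up, P_down = np.outer(up, up.conj()), np.outer(down, down.conj())

# Hypothetical idealized premeasurement unitary: flip the detector only when
# the spin is up (d_down -> d_up); leave it alone when the spin is down.
U_meas = np.kron(P_up, X) + np.kron(P_down, I2)

def premeasure(alpha, beta):
    """Map (alpha|up> + beta|down>)|d_down> to the correlated spin-detector state."""
    psi = alpha * up + beta * down
    phi_initial = np.kron(psi, d_down)
    return U_meas @ phi_initial

alpha, beta = 0.6, 0.8
phi_c = premeasure(alpha, beta)
expected = alpha * np.kron(up, d_up) + beta * np.kron(down, d_down)
print(np.allclose(phi_c, expected))
# Rank 2 of the 2x2 amplitude matrix means the state cannot be written as a product.
print(np.linalg.matrix_rank(phi_c.reshape(2, 2)))
```

The rank check shows that the output is entangled: neither the spin nor the detector has a state of its own. This is the two-branch structure discussed next.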

This correlated state involves two branches of the detector, one in which it (actively in our case) measures spin up and the other (passively) spin down. This is precisely the splitting of the wave function into two branches advanced by Everett to articulate the many-worlds description of quantum mechanics.

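However, in the real world we know the alternatives are distinct outcomes rather than a mere superposition of states. Even if we do not know what the outcomes are, we can safely assume that one of them has actually occurred. When we do experience the result, it is one outcome or the other, not a superposition of outcomes. Everett got around this by pointing out that the observer (consider $d$) cannot detect the splitting, since a consistent result is registered in either branch. Von Neumann was well aware of these difficulties. He postulated that, in addition to the unitary evolution of the wavefunction, there is a non-unitary "reduction of the state vector" (or wavefunction). This reduction converts the superposition into a mixture by cancelling the correlating off-diagonal terms of the pure-state density matrix

$$\rho^c = |\Phi^c\rangle\langle\Phi^c| = |\alpha|^2 |\uparrow\rangle\langle\uparrow|\,|d_\uparrow\rangle\langle d_\uparrow| + \alpha\beta^* |\uparrow\rangle\langle\downarrow|\,|d_\uparrow\rangle\langle d_\downarrow| + \alpha^*\beta |\downarrow\rangle\langle\uparrow|\,|d_\downarrow\rangle\langle d_\uparrow| + |\beta|^2 |\downarrow\rangle\langle\downarrow|\,|d_\downarrow\rangle\langle d_\downarrow|$$

to get a reduced density matrix:

$$\rho^r = |\alpha|^2 |\uparrow\rangle\langle\uparrow|\,|d_\uparrow\rangle\langle d_\uparrow| + |\beta|^2 |\downarrow\rangle\langle\downarrow|\,|d_\downarrow\rangle\langle d_\downarrow|.$$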
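The difference between the pure correlated state and von Neumann's reduced mixture can be checked directly. This Python/NumPy sketch uses arbitrary illustrative amplitudes $\alpha = 0.6$ and $\beta = 0.8i$ and proceeds in three steps:

- It builds $\rho^c$ as an outer product.
- It imposes the reduction by hand, keeping only the two branch-diagonal terms.
- It compares the two using the purity $\mathrm{Tr}\,\rho^2$, which equals 1 for the pure state and $|\alpha|^4 + |\beta|^4 < 1$ for the mixture.

The diagonal probabilities $|\alpha|^2$ and $|\beta|^2$ are the same in both matrices.

```python
import numpy as np

up, down = np.array([1, 0], dtype=complex), np.array([0, 1], dtype=complex)
alpha, beta = 0.6, 0.8j  # illustrative amplitudes, |alpha|^2 + |beta|^2 = 1

# Branches of the correlated spin-detector state
branch_up = np.kron(up, up)        # |up>|d_up>
branch_down = np.kron(down, down)  # |down>|d_down>
phi_c = alpha * branch_up + beta * branch_down

# Pure-state density matrix: contains the off-diagonal interference terms
rho_c = np.outer(phi_c, phi_c.conj())

# Von Neumann's postulated reduction: keep only the branch-diagonal terms
rho_r = (abs(alpha) ** 2) * np.outer(branch_up, branch_up.conj()) \
      + (abs(beta) ** 2) * np.outer(branch_down, branch_down.conj())

def purity(rho):
    """Tr(rho^2): 1 for a pure state, less than 1 for a proper mixture."""
    return np.trace(rho @ rho).real

print("off-diagonal term removed by the reduction:", rho_c[0, 3])  # alpha * conj(beta)
print("purity before and after:", purity(rho_c), purity(rho_r))
print("same diagonal probabilities:", np.allclose(np.diag(rho_c), np.diag(rho_r)))
```

Only the off-diagonal entries differ between the two matrices. That is why the reduction cannot be produced by the unitary evolution of equation 1 acting on the spin and detector alone.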

The key advantage of this interpretation is that it enables us to interpret the diagonal coefficients $|\alpha|^2$ and $|\beta|^2$ as classical probabilities. However, as we have seen with the EPR pair-splitting experiments, the quantum system has not made any decisions about its nature until a measurement has taken place. That is the role of the off-diagonal terms: They are essential to maintain the fully undetermined state of the quantum system. Such a system has not yet even decided whether the electrons are spin up or spin down, or perhaps right or left, or in some other basis combination altogether.

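One way to explain how this additional information is disposed of is to include, in addition, the interaction of the system with its environment. Consider a system $S$, a detector $D$ and an environment $E$. If the environment can also interact and become correlated with the apparatus, we have the following transition:

$$\left(\alpha|\uparrow\rangle|d_\uparrow\rangle + \beta|\downarrow\rangle|d_\downarrow\rangle\right)|E_0\rangle \;\longrightarrow\; \alpha|\uparrow\rangle|d_\uparrow\rangle|E_\uparrow\rangle + \beta|\downarrow\rangle|d_\downarrow\rangle|E_\downarrow\rangle = |\Psi\rangle$$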

This final state extends the correlation beyond the system-detector pair. Suppose the states of the environment corresponding to the spin-up and spin-down states of the detector are orthogonal. Then tracing over the uncontrolled degrees of freedom of the environment yields exactly the reduced density matrix that von Neumann's reduction postulated. Essentially, whenever the observable is a constant of motion of the detector-environment Hamiltonian, the observable will be reduced from a superposition to a mixture. In practice, the interaction of the particle carrying the quantum spin states with a photon, together with the large number of degrees of freedom of the open environment, can make this loss of coherence irreversible.
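Here is a small numerical sketch of the environment-induced version. As a simplifying assumption, the environment is modeled by a single two-level system whose record states have overlap $\langle E_\uparrow|E_\downarrow\rangle = \cos\theta$. Tracing out the environment is done by reshaping the state vector into a (system-detector) × (environment) matrix $M$ and forming $M M^\dagger$. The result leaves the branch-diagonal weights untouched and multiplies the off-diagonal term $\alpha\beta^*$ by the overlap. The off-diagonal term therefore vanishes exactly when the environment states are orthogonal.

```python
import numpy as np

def reduced_system_detector(alpha, beta, theta):
    """Tr_E |Psi><Psi| for |Psi> = alpha|up,d_up,E_up> + beta|down,d_down,E_down>.
    The environment is modeled (assumption) as one two-level system whose
    record states have overlap <E_up|E_down> = cos(theta)."""
    up, down = np.array([1, 0], dtype=complex), np.array([0, 1], dtype=complex)
    E_up = np.array([1, 0], dtype=complex)
    E_down = np.array([np.cos(theta), np.sin(theta)], dtype=complex)
    psi = alpha * np.kron(np.kron(up, up), E_up) \
        + beta * np.kron(np.kron(down, down), E_down)
    # Rows index the spin-detector pair (4 states), columns the environment (2 states);
    # the partial trace over the environment is then M @ M^dagger.
    M = psi.reshape(4, 2)
    return M @ M.conj().T

alpha, beta = 0.6, 0.8
for theta in (0.0, np.pi / 4, np.pi / 2):
    rho_sd = reduced_system_detector(alpha, beta, theta)
    print(f"theta = {theta:.3f}   |off-diagonal| = {abs(rho_sd[0, 3]):.4f}")
```

As $\theta$ goes from 0 to $\pi/2$ the off-diagonal magnitude falls from $|\alpha\beta|$ to zero, while the diagonal entries stay at $|\alpha|^2$ and $|\beta|^2$. A real environment with very many degrees of freedom drives the overlap toward zero quickly.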

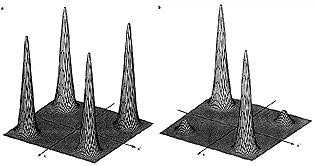

For large classical objects decoherence would be virtually instantaneous because of the high probability of interaction of such a system with some environmental quantum. Zurek then constructs a quantitative model to illustrate the bulk effects of decoherence over time.

The gradual cancellation of the off-diagonal elements with decoherence
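The abridgment omits the details of Zurek's quantitative model. As a rough sketch, assume the standard high-temperature estimate associated with that model. Consider a particle in a superposition of two positions a distance $\Delta x$ apart. The off-diagonal terms of its density matrix then decay roughly as $e^{-t/\tau_D}$, with

$$\tau_D \approx \tau_R \left(\frac{\lambda_T}{\Delta x}\right)^2, \qquad \lambda_T = \frac{\hbar}{\sqrt{2 m k_B T}},$$

where $\tau_R$ is the relaxation time and $\lambda_T$ is the thermal de Broglie wavelength. The Python snippet evaluates this ratio for an illustrative gram-scale object at room temperature with a 1 cm separation. The 1 s relaxation time is a hypothetical value.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
K_B = 1.380649e-23      # Boltzmann constant, J / K

def thermal_de_broglie(mass_kg, temp_k):
    """lambda_T = hbar / sqrt(2 m k_B T)."""
    return HBAR / math.sqrt(2.0 * mass_kg * K_B * temp_k)

def decoherence_to_relaxation_ratio(mass_kg, temp_k, separation_m):
    """tau_D / tau_R = (lambda_T / Delta x)^2 in the assumed high-temperature estimate."""
    return (thermal_de_broglie(mass_kg, temp_k) / separation_m) ** 2

def off_diagonal_weight(t, tau_d):
    """Assumed exponential suppression of the off-diagonal terms, exp(-t / tau_D)."""
    return math.exp(-t / tau_d)

ratio = decoherence_to_relaxation_ratio(mass_kg=1e-3, temp_k=300.0, separation_m=1e-2)
tau_r = 1.0              # hypothetical relaxation time of 1 s
tau_d = ratio * tau_r    # corresponding decoherence time
print(f"tau_D / tau_R ~ {ratio:.1e}")
print(f"off-diagonal weight after 10 tau_D: {off_diagonal_weight(10 * tau_d, tau_d):.2e}")
```

For these inputs the ratio comes out around $10^{-41}$. Even a relaxation time of many years therefore corresponds to a decoherence time far too short to observe. This is why decoherence of large objects is described as virtually instantaneous.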

Decoherence, histories, and the universe

The universe is, of course, a closed system. As far as quantum phase information is concerned, it is practically the only system that is effectively closed. Of course, an observer inhabiting a quantum universe can monitor only very few observables, and decoherence can arise when the unobserved degrees of freedom are "traced over." A more fundamental issue, however, is that of the emergence of the effective classicality as a feature of the universe that is more or less independent of special observers, or of coarse grainings such as a fixed separation of the universe into observed and unobserved systems. The quantum mechanics of the universe must allow for possible large quantum fluctuations of space-time geometry at certain epochs and scales. In particular, it may include important effects of quantum gravity early in the expansion of the universe. Nontrivial issues such as the emergence of the usual notion of time in quantum mechanics must then be addressed. Here we shall neglect such considerations and simply treat the universe as a closed system with a simple initial condition. Significant progress in the study of decoherence in this context has been reported by Murray Gell-Mann and James B. Hartle, who are pursuing a program suitable for quantum cosmology that may be called the many-histories interpretation. The many-histories interpretation builds on the foundation of Everett's many-worlds interpretation, but with the addition of three crucial ingredients: the notion of sets of alternative coarse-grained histories of a quantum system, the decoherence of the histories in a set, and their approximate determinism near the effectively classical limit. A set of coarse-grained alternatives for a quantum system at a given time can be represented by a set of mutually exclusive projection operators, each corresponding to a different range of values for some properties of the system at that time.
(A completely fine-grained set of alternatives would be a complete set of commuting operators.) An exhaustive set of mutually exclusive coarse-grained alternative histories can be obtained, each one represented by a time-ordered sequence of such projection operators.
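Here is a minimal sketch of coarse-grained alternatives. It assumes a hypothetical observable with six discrete values that is diagonal in the chosen basis. Binning the observable's eigenvalues into ranges gives one projection operator per range. The resulting set is mutually exclusive ($P_j P_k = 0$ for $j \neq k$) and exhaustive ($\sum_k P_k = 1$). Using one value per bin recovers the fine-grained case of rank-one projectors.

```python
import numpy as np

def coarse_grained_projectors(eigenvalues, bin_edges):
    """One projector per half-open range [lo, hi) of an observable's eigenvalues.
    The observable is assumed diagonal in the chosen basis."""
    eigenvalues = np.asarray(eigenvalues, dtype=float)
    projectors = []
    for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
        mask = (eigenvalues >= lo) & (eigenvalues < hi)
        projectors.append(np.diag(mask.astype(float)))
    return projectors

# Hypothetical 6-level "position" observable with values 0..5, split into two ranges
x_values = np.arange(6)
P = coarse_grained_projectors(x_values, bin_edges=[0, 3, 6])

print(np.allclose(P[0] @ P[1], 0))               # mutually exclusive
print(np.allclose(sum(P), np.eye(6)))            # exhaustive
print(all(np.allclose(p @ p, p) for p in P))     # each P_k is a projector
```

A history is then a time-ordered product of such projectors, one chosen from the set at each time.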

The definition of consistent histories for a closed quantum system was first proposed by Robert Griffiths. He demonstrated that when the sequences of projection operators satisfy a certain condition (the vanishing of the real part of every interference term between sequences), the histories characterized by these sequences can be assigned classical probabilities; in other words, the probabilities of alternative histories can be added. Griffiths's idea was further extended by Roland Omnès, who developed the "logical interpretation" of quantum mechanics by demonstrating how the rules of ordinary logic can be recovered when making statements about properties that satisfy the Griffiths criterion.

Recently Gell-Mann and Hartle pointed out that in practice somewhat stronger conditions than Griffiths's tend to hold whenever histories decohere. The strongest condition is connected with the idea of records and the crucial fact that noncommuting projection operators in a historical sequence can be registered through commuting operators designating records. They defined a decoherence functional in terms of which the Griffiths criterion and the stronger versions of decoherence are easily stated.
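Here is a minimal numerical sketch of these ideas for a single qubit and two-time histories, assuming trivial dynamics ($H = 0$) between the projection times. It builds the class operators $C_\alpha = P_{\alpha_2} P_{\alpha_1}$ and forms the decoherence functional $D(\alpha, \alpha') = \mathrm{Tr}[C_\alpha \rho\, C_{\alpha'}^\dagger]$ in Gell-Mann and Hartle's usual form. It then checks Griffiths's condition that $\mathrm{Re}\, D$ vanishes for every pair of distinct histories. One of the stronger conditions (often called medium decoherence) requires the whole off-diagonal entry to vanish. The qubit example itself is hypothetical.

```python
import itertools
import numpy as np

def rank_one_projectors(basis):
    """|v><v| for each column v of an orthonormal basis matrix."""
    return [np.outer(v, v.conj()) for v in basis.T]

def decoherence_functional(rho, projector_sets):
    """D[a, b] = Tr(C_a rho C_b^dagger) with C_a = P_{a_n} ... P_{a_1}.
    Dynamics between projection times is taken to be trivial (H = 0), an assumption."""
    dim = rho.shape[0]
    histories = list(itertools.product(*(range(len(s)) for s in projector_sets)))
    chains = []
    for history in histories:
        c = np.eye(dim, dtype=complex)
        for step, k in enumerate(history):
            c = projector_sets[step][k] @ c  # later projectors multiply on the left
        chains.append(c)
    D = np.array([[np.trace(ca @ rho @ cb.conj().T) for cb in chains] for ca in chains])
    return histories, D

def griffiths_consistent(D, tol=1e-12):
    """Griffiths's condition: Re D[a, b] = 0 for every pair of distinct histories."""
    off_diagonal = D - np.diag(np.diag(D))
    return bool(np.all(np.abs(off_diagonal.real) < tol))

z_basis = np.eye(2, dtype=complex)
x_basis = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
plus = x_basis[:, 0]
rho = np.outer(plus, plus.conj())  # initial state |+x><+x|

# "z then z": the second projection repeats the first record, so histories decohere.
hist_zz, D_zz = decoherence_functional(rho, [rank_one_projectors(z_basis)] * 2)
# "z then x": interference between the two z-alternatives survives.
hist_zx, D_zx = decoherence_functional(
    rho, [rank_one_projectors(z_basis), rank_one_projectors(x_basis)])

print(griffiths_consistent(D_zz), griffiths_consistent(D_zx))  # True False
print("probabilities of z-z histories:", np.diag(D_zz).real)
```

In the consistent set the diagonal entries $D(\alpha, \alpha)$ behave as additive probabilities. In the "z then x" set the surviving interference terms, much as in a two-slit experiment, block that classical reading.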

Given the initial state of the universe (perhaps a mixed state) and the time evolution dictated by the quantum field theory of all the elementary particles and their interactions, one can in principle predict probabilities for any set of alternative decohering histories of the universe. Gell-Mann and Hartle raise the question of which sets exhibit the classicality of familiar experience. Decoherence is a precondition for such classicality; the remaining criterion, approximate determinism, is not yet defined with precision and generality. Within the many-histories program, one is studying the stringent requirements put on the coarseness of histories by their classicality. Besides the familiar and comparatively trivial indeterminacy imposed by the uncertainty principle, there is the further coarse graining required for decoherence of histories. Still further coarseness (for example, that encountered in following hydrodynamic variables averaged over macroscopic scales) can supply the high inertia that resists the noise associated with the mechanics of decoherence and so permits decohering histories to exhibit approximate predictability. Thus the effectively classical domain through which quantum mechanics can be perceived necessarily involves a much greater indeterminacy than is generally attributed to the quantum character of natural phenomena.

Quantum theory of classical reality

We have seen how classical reality emerges from the substrate of quantum physics: Open quantum systems are forced into states described by localized wavepackets. These essentially classical states obey classical equations of motion, although with damping and fluctuations of possibly quantum origin. What else is there to explain? The origin of the question about the interpretation of quantum physics can be traced to the clash between predictions of the Schrödinger equation and our perceptions. It is therefore useful to conclude this paper by revisiting the source of the problem: our awareness of definite outcomes. If the mental processes that produce this awareness were essentially unphysical, there would be no hope of addressing the ultimate question (why do we perceive just one of the quantum alternatives?) within the context of physics. Indeed, one might be tempted to follow Eugene Wigner in giving consciousness the last word in collapsing the state vector.

I shall assume the opposite. That is, I shall examine the idea that the higher mental processes all correspond to well-defined but, at present, poorly understood information processing functions that are carried out by physical systems, our brains. Described in this manner, awareness becomes susceptible to physical analysis. In particular, the process of decoherence is bound to affect the states of the brain: Relevant observables of individual neurons, including chemical concentrations and electrical potentials, are macroscopic. They obey classical, dissipative equations of motion. Thus any quantum superposition of the states of neurons will be destroyed far too quickly for us to become conscious of quantum goings-on: Decoherence applies to our own "state of mind." One might still ask why the preferred basis of neurons becomes correlated with the classical observables in the familiar universe. The selection of available interaction Hamiltonians is limited and must constrain the choices of the detectable observables. There is, however, another process that must have played a decisive role: Our senses did not evolve for the purpose of verifying quantum mechanics. Rather, they developed through a process in which survival of the fittest played a central role. And when nothing can be gained from prediction, there is no evolutionary reason for perception. Moreover, only classical states are robust in spite of decoherence and therefore have predictable consequences. Hence one might argue that we had to evolve to perceive classical reality. There is little doubt that the process of decoherence sketched in this paper is an important fragment central to the understanding of the big picture: the transition from quantum to classical. Decoherence destroys superpositions. The environment induces, in effect, a superselection rule that prevents certain superpositions from being observed. Only states that survive this process can become classical.

There is even less doubt that the rough outline of this big picture will be further extended. Much work needs to be done both on technical issues (such as studying more realistic models that could lead to experiments) and on issues that require new conceptual input (such as defining what constitutes a "system" or answering the question of how an observer fits into the big picture). Decoherence is of use within the framework of either of the two major interpretations: It can supply a definition of the branches in Everett's many-worlds interpretation, but it can also delineate the border that is so central to Bohr's point of view. And if there is one lesson to be learned from what we already know about such matters, it is undoubtedly the key role played by information and its transfer in the quantum universe. The natural sciences were built on a tacit assumption: Information about a physical system can be acquired without influencing the system's state. Until recently, information was regarded as unphysical, a mere record of the tangible, material universe, existing beyond and essentially decoupled from the domain governed by the laws of physics. This view is no longer tenable. Quantum theory has helped to put an end to such Laplacean dreams of a mechanical universe. The dividing line between what is and what is known to be has been blurred forever. Conscious observers have lost their monopoly on acquiring and storing information. The environment can also monitor a system, and the only difference from a man-made apparatus is that the records maintained by the environment are nearly impossible to decipher. Nevertheless, such monitoring causes decoherence. Decoherence in turn allows the familiar approximation known as classical objective reality to emerge from the quantum substrate: a perception of a selected subset of all conceivable quantum states evolving in a largely predictable manner.