Get the Genesis

of Eden AV-CD by secure

internet order >> CLICK_HERE

Windows / Mac Compatible. Includes live video seminars, enchanting renewal songs and a thousand page illustrated codex.

Return

to Genesis of Eden?

Quantum Consciousness

Polymath Roger Penrose takes on the ultimate mystery

John Horgan Scientific American Nov 89

The Emperor's New Mind: Concerning Computers, Minds and the Laws of Physics, Roger Penrose, Oxford University Press, 1989.

Shadows of the Mind: A Search for the Missing Science of Consciousness, Roger Penrose, Oxford University Press, 1994.

Roger Penrose is slight in figure and gentle in mien, and he is an oddly diffident chauffeur for a man who has just proposed how the entire universe-including the enigma of human consciousness-might work. Navigating from the airport outside Syracuse, N.Y., to the city's university, he brakes at nearly every crossroad, squinting at signs as if they bore alien runes. Which way is the right way? he wonders, apologizing to me for his indecision. He seems mired in mysteries.

When we finally reach his office, Penrose finds a can labeled "Superstring" on a table. He chuckles. On the subject of superstrings-not the filaments of foam squirted from this novelty item but the unimaginably minuscule particles that some theorists think may underlie all matter-his mind is clear: he finds them too ungainly, inelegant. "It's just not the way I'd expect the answer to be," he observes in his mild British accent.

When Penrose says "the answer," one envisions the words in capital letters. He confesses to agreeing with Plato that the truth is embodied in mathematics and exists "out there," independent of the physical world and even of human thought. Scientists do not invent the truth-they discover it. A genuine discovery should do more than merely conform to the facts: it should feel right, it should be beautiful. In this sense, Penrose feels somewhat akin to Einstein, who judged the validity of propositions about the world by asking: Is that the way God would have done it? "Aesthetic qualities are important in science," Penrose remarks, "and necessary, I think, for great science."

I interviewed Penrose in September while he was visiting Syracuse University, on leave from his full-time post at the University of Oxford. At 58 he is one of the world's most eminent mathematicians and/or physicists (he cannot decide which category he prefers). He is a "master," says the distinguished physicist John A. Wheeler of Princeton University, at exploiting "the magnificent power of mathematics to reach into everything."

An achievement in astrophysics first brought Penrose fame. In the 1960's he collaborated with Stephen W. Hawking of the University of Cambridge in showing that singularities-objects so crushed by their own weight that they become infinitely dense, beyond the ken of classical physics-are not only possible but inevitable under many circumstances. This work helped to push black holes from the outer limits of astrophysics to the center.

In the 1970's Penrose's lifelong passion for geometric puzzles yielded a bonus. He found that as few as two geometric shapes, put together in jigsaw-puzzle fashion, can cover a flat surface in patterns that never repeat themselves. "To a small extent I was thinking about how simple structures can force complicated arrangements," Penrose says, "but mainly I was doing it for fun." Called Penrose tiles, the shapes were initially considered a curiosity unrelated to natural phenomena. Then in 1984 a researcher at the National Bureau of Standards discovered a substance whose molecular structure resembles Penrose tiles. This novel form of solid matter, called quasicrystals, has become a major focus of materials research [see "Quasicrystals," by David R. Nelson; Scientific American August, 1986].

Quasicrystals, singularities and almost every other oddity Penrose has puzzled over figure into his current magnum opus, The Emperor's New Mind. The book's ostensible purpose is to refute the view held by some artificial-intelligence enthusiasts that computers will someday do all that human brains can do-and more.

The reader soon realizes, however, that Penrose's larger goal is to point the way to a grand synthesis of classical physics, quantum physics and even neuropsychology. He begins his argument by slighting computers' ability to mimic the thoughts of a mathematician. At first glance, computers might seem perfectly suited to this endeavor: after all, they were created to calculate. But Penrose points out that Alan M. Turing himself, the original champion of artificial intelligence, demonstrated that many mathematical problems are not susceptible to algorithmic analysis and resolution. The bounds of computability, Penrose says, are related to Godel's theorem, which holds that any mathematical system always contains self-evident truths that cannot be formally proved by the system's initial axioms. The human mind can comprehend these truths, but a rule-bound computer cannot.

In what sense, then, is the mind unlike a computer? Penrose thinks the answer might have something to do with quantum physics. A system at the quantum level (a group of hydrogen atoms, for instance) does not have a single course of behavior, or state, but a number of different possible states that are somehow "superposed" on one another. When a physicist measures the system, however, all the superposed states collapse into a single state; only one of all the possibilities seems to have occurred. Penrose finds this apparent dependence of quantum physics on human observation-as well as its incompatibility with macroscopic events-profoundly unsatisfying. If the quantum view of reality is absolutely true, he suggests, we should see not a single cricket ball resting on a lawn but a blur of many balls on many lawns. He proposes that a force now conspicuously absent in quantum physics-namely gravity-may link the quantum realm to the classical, deterministic world we humans inhabit. That idea in itself is not new: many theorists-including those trying to weave reality out of superstrings-have sought a theory of quantum gravity.

But Penrose takes a new approach. He notes that as the various superposed states of a quantum-level system evolve over time, the distribution of matter and energy within them begins to diverge. At some level-intermediate between the quantum and classical realms-the differences between the superposed states become gravitationally significant; the states then collapse into the single state that physicists can measure. Seen this way, it is the gravitational influence of the measuring apparatus-and not the abstract presence of an observer-that causes the superposed states to collapse. Penrosian quantum gravity can also help account for what are known as nonlocal effects, in which events in one region affect events in another simultaneously. The famous Einstein-Podolsky-Rosen thought experiment first indicated how nonlocality could occur: if a decaying particle simultaneously emits two photons in opposite directions, then measuring the spin of one photon instantaneously "fixes" the spin of the other, even if it is light-years away. Penrose thinks quasicrystals may involve nonlocal effects as well. Ordinary crystals, he explains, grow serially, one atom at a time, but the complexity of quasicrystals suggests a more global phenomenon: each atom seems to sense what a number of other atoms are doing as they fall into place in concert. This process resembles that required for laying down Penrose tiles; the proper placement of one tile often depends on the positioning of other tiles that are several tiles removed.

What does all this have to do with consciousness? Penrose proposes that the physiological process underlying a given thought may initially involve a number of superposed quantum states, each of which performs a calculation of sorts. When the differences in the distribution of mass and energy between the states reach a gravitationally significant level, the states collapse into a single state, causing measurable and possibly nonlocal changes in the neural structure of the brain. This physical event correlates with a mental one: the comprehension of a mathematical theorem, say, or the decision not to tip a waiter.

Schrodinger's cat

The important thing to remember, Penrose says, is that this quantum process cannot be replicated by any computer now conceived. With apparently genuine humility, Penrose emphasizes that these ideas should not be called theories yet: he prefers the word "suggestions." But throughout his conversation and writings, he seems to imply that someday humans (not computers) will discover the ultimate answer-to everything. Does he really believe that? Penrose mulls the question over for a moment. "I guess I rather do," he says finally, "although perhaps that's being too pessimistic." Why pessimistic? Isn't that the hope of science? "Solving mysteries, or trying to solve them, is wonderful," he replies, "and if they were all solved that would be rather boring."

A Review of The Emperor's New Mind by A K Dewdney Scientific American Dec 89

"Are minds subject to the laws of physics? What, indeed, are the laws of physics?" -Roger Penrose, The Emperor's New Mind

Human intelligence outstrips artificial intelligence because it exploits physics at the quantum-mechanical level. That is a provocative position, but one that Roger Penrose, the noted mathematical physicist, leans toward in his new book. Although (as Penrose readily admits) the proposition cannot be rigorously proved at present, the intriguing arguments in The Emperor's New Mind have produced some healthy doubts about the philosophical foundations of artificial intelligence. I shall present Penrose's arguments below-but because this column follows its own compass in charting unknown waters, I shall challenge some of his conclusions and tinker with some of his ideas. In particular, I shall expand the question How do people think? to ask whether human beings will ever know enough to answer such a question. If the universe has an infinite structure, humans may never answer the question fully. An infinite regress of structure, on the other hand, offers some unique computational possibilities.

From John Searle's article "Is the Brain's Mind a Computer Program?" Scientific American Jan 90.

(a) I satisfy the Turing Test for understanding Chinese. (b) Computer programs are formal (syntactic). Human minds are mental (semantic).

Before jumping into such matters, I invite readers to explore the recesses of The Emperor's New Mind with me. First, we shall visit the famed Chinese room to inquire whether 'intelligent' programs understand what they are doing. Next, a brief tour of the Platonic pool hall will bring us face to face with a billiards table that exploits the classical physics of elastic collisions to compute practically anything. Moving along to Erwin Schrodinger's laboratory, we shall inquire after the health of his cat in order to investigate the relation between classical physics and quantum mechanics. Finally, we shall reach our destination: an infinite intelligence able to solve a problem that no ordinary, finite computer could ever hope to conquer.

Watching television one evening several years ago, Penrose felt drawn to a BBC program in which proponents of artificial intelligence made what seemed to be a brash claim. They maintained that computers, more or less in their present form, could some day be just as intelligent as humans-perhaps even more so. The claim irritated Penrose. How could the complexities of human intelligence, especially creativity, arise from an algorithm churning away within a computer brain? The extremity of the claims 'goaded' him into the project that led to The Emperor's New Mind.

A methodical exploration of computing theory brought Penrose to criticize one of its philosophical cornerstones, the Turing test. Many computer scientists accept the test as a valid way of distinguishing an intelligent program from a non-intelligent one. In the test, a human interrogator types messages to two hidden subjects, one a person and the other a computer programmed to respond to questions in an intelligent manner. If, after a reasonable amount of time, the interrogator cannot tell the difference between the typed responses of the human and those of the computer, then the program has passed the Turing test.

Penrose argues that the test provides only indirect evidence for intelligence. After all, what may appear to be an intelligent entity may turn out to be a mockery, just as an object and its mirror image look identical but in their details are different. Penrose maintains that a direct method for measuring intelligence may require more than a simple Turing test. To strengthen his argument, Penrose wanders into the Chinese room, a peculiar variation of the Turing test invented by philosopher John R. Searle (Scientific American, Jan 1990). A human interrogator stands outside a room that only allows the entrance and exit of paper messages. The interrogator types out a story and related questions and sends them into the room. The twist: all messages that go into and out of the room are typed in Chinese characters.

To make matters even more bizarre, a person inside the room executes a program that responds to the story by answering questions about it. This person exactly replaces the computer hardware. The task would be tedious and boring but, once the rules of execution were learned, rather straightforward. To guarantee the ignorance of the human hardware, he or she has no knowledge whatsoever of Chinese. Yet the Chinese room seems to understand the story and responds to the questions intelligently. The upshot of the exercise, as far as Penrose is concerned, is that "the mere carrying out of a successful algorithm does not in itself imply that any understanding has taken place." This conclusion certainly holds if it is directed at the executing apparatus, whether flesh or hardware. After all, whether the program happens to be executed by a human or by a computer makes no difference, in principle, to the outcome of the program's interaction with the outside world. But for this very reason the human in the Chinese room is something of a straw man: no one would fault a program because the hardware fails to understand what the program is all about. To put the point even more strongly, no one would be critical of a neuron for not understanding the significance of the pulse patterns that come and go. This would be true whether or not the neuron happened to be executing part of an algorithm or doing something far more sophisticated. Any strength in claims for artificial intelligence must surely lie in the algorithm itself. And that is where Penrose attacks next. The world of algorithms is essentially the world of the computable. In Penrose's words, algorithms constitute "a very narrow and limited part of mathematics." Penrose believes (as I and many other mathematicians do) in a kind of Platonic reality inhabited by mathematical objects. Our clue to the independent existence of such objects lies in our complete inability to change them. They are just "there," like mountains or oceans.

Penrose cites the Mandelbrot set as an example. The Mandelbrot set was not "invented" by Benoit B. Mandelbrot, the renowned IBM research fellow, but was discovered by him. Like the planet Neptune, the set existed long before any human set eyes on it and recognized its significance. The Mandelbrot set carries an important message for those who imagine it to be a creature of the computer. It is not. The Mandelbrot set cannot even be computed! Do I hear howls of outrage? Strictly speaking, Penrose is right. The Mandelbrot set, while it is but one landmark in the Platonic world, lies somewhat distant from algorithmic explorers. Readers may recall that points in the interior of the set can be found by an iterative process: a complex number c is squared and then the result, z1, is squared and added to c; then the second result, z2, is squared and added to c; and so on. If the succession of z values thus produced never patters off into infinity, then c belongs to the set's interior. But here a grave question emerges. How long does one have to wait to decide whether the sequence of values remains bounded? The answer is, essentially, forever! In practice one interposes a cutoff to the computation. In doing so one inevitably includes a few points that do not belong in the set because it takes longer for the sequence based on such points to diverge.
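
The cutoff problem can be made concrete with a minimal sketch. It assumes the standard form of the iteration (start from z = 0 and repeat z → z² + c) and the usual escape test |z| > 2; the function name `stays_bounded` and the parameters `max_iter` and `radius` are illustrative choices, not anything taken from the review.

```python
def stays_bounded(c: complex, max_iter: int = 100, radius: float = 2.0) -> bool:
    """Return True if the orbit of c has not escaped after max_iter steps.

    A True result only means "not yet seen to diverge": the cutoff
    max_iter stands in for the infinite wait the text describes.
    """
    z = 0j
    for _ in range(max_iter):
        z = z * z + c          # one step of the iteration z -> z^2 + c
        if abs(z) > radius:    # once |z| exceeds 2 the orbit runs off to infinity
            return False
    return True


# c = 0.26 lies just outside the set (the set meets the real axis up to 0.25),
# but its orbit grows so slowly that a short cutoff wrongly accepts it.
accepted_with_short_cutoff = stays_bounded(0.26 + 0j, max_iter=10)
accepted_with_long_cutoff = stays_bounded(0.26 + 0j, max_iter=1000)
```

With the short cutoff the point is counted as a member; with the longer one it is rejected. No finite cutoff removes this kind of error for every point.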

Difficulties in computing the Mandelbrot set pale in comparison with other limitations on the algorithmic adventure. For example, mathematics itself is formally considered to be built of axiom systems. Set forth a modest collection of axioms, a rule of inference or two-and one is in business. A conceptual algorithm called the British Museum Algorithm generates all the theorems that are provable within the formal systems of axioms and inference rules. Unfortunately, the theorems thus produced do not necessarily include all truths of the system. This discovery, by the mathematician Kurt Godel, dashed hopes of mechanizing all of mathematics. Penrose takes Godel's famous theorem as evidence that human intelligence can transcend the algorithmic method: "... a clear consequence of the Godel argument [is] that the concept of mathematical truth cannot be encapsulated in any formal scheme." How then could Godel's theorem itself be the result of an algorithm? I do not know the answer to this question, but I have seen the point raised before. It does seem, however, that Godel's theorem might be derived from other axioms and rules of inference and therefore could be produced by an algorithm. The theorem might be just part of a never-ending stream of metatheorems. I should be grateful to hear from knowledgeable readers who can set me straight on this point.
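
As a rough illustration of what "generating all provable theorems" means, here is a small sketch of a British Museum style enumeration. The toy formal system (a single axiom `"1"` and two string rules, `append_zero` and `double`) is invented purely for this example and has nothing to do with any system Penrose or Godel discusses; only the breadth-first idea is the point.

```python
from collections import deque
from itertools import islice


def british_museum(axioms, rules):
    """Yield every derivable theorem, breadth first, without repeats.

    axioms: iterable of starting theorems.
    rules:  functions mapping one theorem to an iterable of new theorems.
    The generator never stops on its own if the system is infinite.
    """
    queue = deque(axioms)
    seen = set(queue)
    while queue:
        theorem = queue.popleft()
        yield theorem
        for rule in rules:
            for derived in rule(theorem):
                if derived not in seen:
                    seen.add(derived)
                    queue.append(derived)


# Hypothetical toy system: theorems are strings of digits.
def append_zero(s):
    return [s + "0"]


def double(s):
    return [s + s]


first_five = list(islice(british_museum(["1"], [append_zero, double]), 5))
# first_five == ["1", "10", "11", "100", "1010"]
```

The enumeration eventually reaches anything the rules can derive, but it says nothing about statements that are true yet underivable, which is the gap Godel's theorem exposes.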

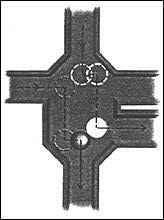

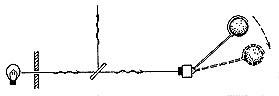

A billiard-ball AND gate.

Whatever one's opinion on such questions, The Emperor's New Mind attacks the claims of artificial intelligence on another front: the physics of computing. Penrose hints that the real home of computing lies more in the tangible world of classical mechanics than in the imponderable realm of quantum mechanics. The modern computer is a deterministic system that for the most part simply executes algorithms. In a somewhat jolly fashion Penrose takes a billiard table, the scene of so many classical encounters, as the appropriate framework for a computer in the classical mold. By reconfiguring the boundaries of a billiard table, one might make a computer in which the billiard balls act as message carriers and their interactions act as logical decisions. The billiard-ball computer was first designed some years ago by the computer scientists Edward Fredkin and Tommaso Toffoli of the Massachusetts Institute of Technology. The reader can appreciate the simplicity and power of a billiard-ball computer by examining the diagram. The diagram depicts a billiard-ball logic device. Two in-channels admit moving balls into a special chamber, which has three out-channels. If just one ball enters the chamber from either in-channel, it will leave by either the bottom out-channel or the one at the upper right. If two balls enter the chamber at the same time, however, one of them will leave by the out-channel at the lower right. The presence or absence of a ball in this particular out-channel signals the logical function known as an AND gate. The output is a ball if, and only if, a ball enters one in-channel and the other one. A computer can be built out of this particular gate type and just one other, a chamber in which a ball leaves by a particular channel if, and only if, a ball does not enter by another channel. Readers may enjoy trying to design such a chamber, bearing in mind that additional balls might be helpful in the enterprise.
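
The claim that these two chambers suffice can be checked at the level of pure logic. The sketch below ignores the geometry entirely: a ball in a channel is modelled as `True`, an empty channel as `False`, and the second chamber is treated as a NOT gate. All function names are illustrative assumptions, not part of the Fredkin-Toffoli design.

```python
def and_gate(a: bool, b: bool) -> bool:
    """Lower-right out-channel: a ball leaves only if both in-channels had one."""
    return a and b


def not_gate(a: bool) -> bool:
    """Second chamber: a ball leaves only if no ball entered the other channel."""
    return not a


def or_gate(a: bool, b: bool) -> bool:
    # De Morgan: a OR b == NOT(NOT a AND NOT b), built only from the two chambers.
    return not_gate(and_gate(not_gate(a), not_gate(b)))


def xor_gate(a: bool, b: bool) -> bool:
    # Exactly one ball: (a OR b) AND NOT(a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers; returns (sum bit, carry bit)."""
    return xor_gate(a, b), and_gate(a, b)
```

Calling `half_adder(True, True)` gives a sum bit of `False` and a carry of `True`, that is, 1 + 1 = 10 in binary. Wiring such adders together is the first step toward general arithmetic.
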
Everyone appreciates the smooth, classical motions of a billiard ball. It has other desirable properties that are hardly given a second thought. For example, no one ever has to worry whether a billiard ball is in two places at the same time.

Quantum mechanics, however, produces such anxieties. Quantum systems such as the famous two-slit experiment leave open the possibility that a photon can be in two places at once. Briefly, when photons pass through a double slit, they can be regarded as waves that interfere with themselves. An interference pattern emerges on a screen behind the slits unless one places a detector at either slit. The act of observation forces the photon to decide, in effect, which hole it will pass through! The phenomenon is called a state vector collapse. The experiment can be extended to an observation that takes place at either of two sites that are a kilometer (even a light-year) apart. The photon can decide which slit it will pass through, many physicists claim, only if it is effectively in both places at once.

At what point in the continuum of scales, from the atomic to the galactic, does a quantum-mechanical system become a classical one? The dilemma is illustrated by Schrodinger's famous cat. In this gedanken (thought) experiment, a scientist who has no fear of animal-rights activists places a cat and a vial of toxic gas in a room that contains a laser, a half-silvered mirror, a light detector and a hammer. When the room is sealed, the laser emits a photon toward the mirror. If the photon passes through the mirror, no harm comes to the cat. But if the photon is reflected in the mirror, it hits the detector, which activates the hammer, which smashes the vial, which contains the gas, which kills the cat. From outside the room one cannot know whether the cat lives or dies. In the quantum-mechanical world, the two possible events coexist as superimposed realities. But in the classical world, only one event or the other may occur. The state vector (and possibly the cat) must collapse. Penrose suggests that current theory lacks a way of treating the middle ground between classical physics and quantum mechanics. The theory is split in two but it should be seamless on a grand scale. Perhaps the synthesis will come from an area known as quantum gravity.
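
The two-slit behaviour described above has a compact textbook form. The symbols are introduced here for illustration and do not appear in the review: $\psi_1(x)$ and $\psi_2(x)$ are the amplitudes for a photon to reach point $x$ on the screen via slit 1 or slit 2. With no detector at the slits the amplitudes add before squaring,

$$
P_{\text{open}}(x) = \lvert \psi_1(x) + \psi_2(x) \rvert^2 = \lvert \psi_1(x) \rvert^2 + \lvert \psi_2(x) \rvert^2 + 2\,\mathrm{Re}\!\left[\psi_1^*(x)\,\psi_2(x)\right],
$$

and the last term produces the interference fringes. With a detector recording which slit was used, the probabilities add instead,

$$
P_{\text{watched}}(x) = \lvert \psi_1(x) \rvert^2 + \lvert \psi_2(x) \rvert^2 ,
$$

so the cross term, and with it the pattern, disappears.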

Now back, finally, to the human mind. For Penrose, consciousness has a non-algorithmic ingredient. At the quantum level, different alternatives can coexist. A single quantum state could in principle consist of a large number of different, simultaneous activities. Is the human brain somehow able to exploit this phenomenon? I can hardly explore this eerie possibility as well as Penrose does. Readers intrigued by the thought had best buy the book. I was inspired, however, to investigate a related question. Could human beings quantify their own intelligence in a universe whose structure goes on forever? An end to the structure of matter, either as an ultimate particle or a set of particles, seems inconceivable. By this I mean not just particles but any structure, whether energetic or even purely informational, underlying the phenomena in question. It seems to me that physics itself may be an infinite enterprise for the simple reason that as soon as some 'ultimate' structure is discovered, explaining the existence of the 'ultimate' laws becomes the next problem.

In any event, I would prefer to live in an infinitely structured universe. For one thing, our minds might turn out to be much more powerful than if the structure went only so far. Computers are constructed to rule out the influence of any physical process below a certain scale of size. The algorithm must be protected from errors. Our brains may or may not be so structured, as Penrose points out. Physical events at the atomic level might well have an important role to play in thought formation. Processes at the molecular level certainly do. One has only to think of the influence of neuro-transmitter molecules on the behavior of a neuron. Furthermore, it is a well-known characteristic of nature to take advantage of physical possibilities in the deployment of biological operations. If physical structures extend to a certain level, is there some a priori reason to believe that the brain must automatically be excluded from exploiting it? What if the brain could exploit all levels of structure in an infinitely structured universe?

A fractal computer solves Thue's word problem

To demonstrate in the crudest imaginable way the potential powers of an infinite brain, I have designed an infinite computer that exploits the structure at all levels. For the purposes of the demonstration, I will pretend that the structure is classical at all scales. My infinite computer (see illustration) is essentially a square that contains two rectangles and two other, smaller squares. An input wire enters the big square from the left and passes immediately into the first rectangle. This represents a signal-processing device that I call a substitution module. The substitution module sends a wire to each of the two small squares and also to the other rectangle, henceforth called a message module. The structure of the whole computer regresses infinitely. Each of the two smaller squares is an exact duplicate of the large square, but at half the scale. When a signal is propagated through the wires and modules at half the scale, it takes only half as long to traverse the distances involved, and so the substitution and message modules operate twice as fast as the corresponding modules one level up. The infinite computer solves the famous "word problem" invented by the mathematician Axel Thue. In this problem, one is given two words and a dictionary of allowed substitutions. Can one, by substitutions alone, get from the first word to the second one? Here is an example of the problem: suppose the first word is 100101110 and the second is 01011101110. Can one change the first word into the second by substituting 010 for 110, 10 for 111 and 100 for 001? The example is chosen at random. I deliberately refrain from attempting to solve it. It might happen that no sequence of substitutions will transform the first word into the second. On the other hand, there might be a sequence of substitutions that does the job. In the course of these substitutions, intermediate words might develop that are very long. Therein lies the problem.
As with certain points in the Mandelbrot set, one cannot effectively decide the answer. There is no algorithm for the problem, because an algorithm must terminate, by definition. The danger is that the algorithm might terminate before the question is decided. Thue's word problem is called undecidable for this reason. No computer program, even in principle, can solve all instances of this problem. Enter the infinite computer. The target word is given to the computer through the main input wire. It enters the first substitution module in 1/4 second. The word is then transmitted by the substitution module to the two substitution modules at the next level. But this transmission takes only 1/8 second. Transmission to subsequent levels takes 1/16, then 1/32 second and so on. The total time for all substitution modules to become "loaded" with the target word is therefore half a second. Next, the three (or however many) substitution formulas are fed into the computer by the same process and at the same speed. This time, however, the various substitution modules at different levels are preprogrammed to accept only certain substitutions in the sequence as their own, and they are also preprogrammed always to attempt a substitution at a specific place in a word that arrives from a higher level. A recital of the distribution scheme for farming out the substitutions and their places would probably try the reader's patience, and so I shall omit it. This should not prevent those who enjoy infinite excursions from imagining how it might be managed. The computation begins when one sends the first word into the computer. The first substitution module attempts to make its own substitution at its allotted place in the incoming word. If the substitution cannot be made in the required place, the substitution module transmits the word to the lower square in the next level down; if it succeeds in making the substitution, it transmits the transformed word to the upper square.
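
The half-second loading time quoted above is the sum of a geometric series. Writing the delay for level $k$ as $2^{-k}$ seconds, with the first module at $k = 2$ (an indexing chosen here for convenience), the total is

$$
\frac{1}{4} + \frac{1}{8} + \frac{1}{16} + \frac{1}{32} + \cdots = \sum_{k=2}^{\infty} 2^{-k} = \frac{1/4}{1 - 1/2} = \frac{1}{2}\ \text{second},
$$

so infinitely many levels are loaded in a finite time. Any signal that travels the full depth of the machine once, in either direction, is bounded by the same half second.
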
If the substitution succeeds and the newly produced word matches the target word stored in the substitution module's memory, then the module sends a special signal to the message module: 'success.' Each square at each level operates exactly in this manner. As I indicated earlier, it is possible to distribute substitutions (and places at which they are to be attempted) throughout the infinite computer in such a way that the word problem will always be solved. The question takes at most one second to decide in all cases: half a second for the computation to proceed all the way down to infinitesimal modules and half a second for the message 'success' to reach the main output wire. If no substitution sequence exists, the absence of a message after one second may be taken as a "no" answer. Readers may enjoy pondering the infinite computer while exploiting the many (perhaps infinite) structures of their own brain.
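
For contrast, here is what an ordinary finite machine can do with the word problem: search breadth first and give up at a cutoff. This is only a sketch under stated assumptions. Each rule is an `(old, new)` pair applied in one direction, which is one possible reading of "substituting X for Y", and the names `bounded_word_search`, `max_steps` and `max_len` are invented for the example.

```python
from collections import deque


def bounded_word_search(start, target, rules, max_steps, max_len):
    """Look for a chain of substitutions turning start into target.

    rules: list of (old, new) pairs; one step replaces a single
           occurrence of old by new.
    Returns True if a chain of at most max_steps steps is found using
    words no longer than max_len, otherwise None. None means
    "undecided within the cutoffs", not "impossible".
    """
    frontier = deque([(start, 0)])
    seen = {start}
    while frontier:
        word, depth = frontier.popleft()
        if word == target:
            return True
        if depth == max_steps:
            continue  # budget exhausted on this branch
        for old, new in rules:
            idx = word.find(old)
            while idx != -1:
                candidate = word[:idx] + new + word[idx + len(old):]
                if len(candidate) <= max_len and candidate not in seen:
                    seen.add(candidate)
                    frontier.append((candidate, depth + 1))
                idx = word.find(old, idx + 1)
    return None


# Tiny illustrative instance (not the article's example).
found = bounded_word_search("aab", "baa", [("ab", "ba")], max_steps=5, max_len=10)
undecided = bounded_word_search("aab", "baa", [("ab", "ba")], max_steps=1, max_len=10)
```

The article's own instance could be tried by passing `"100101110"`, `"01011101110"` and whichever reading of its three substitutions the reader prefers; no result is claimed here. Whatever cutoffs are chosen, some instances will come back undecided, which is exactly the limitation the infinite computer is imagined to escape.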

Roger Penrose on Gravitational Reduction of the Wave Packet Part 1:

From: The Emperor's New Mind

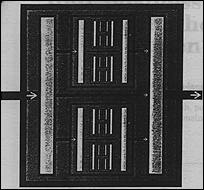

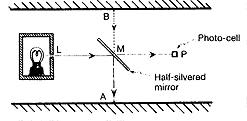

Fig. 8.3. Time-irreversibility of R in a simple quantum experiment. The probability that the photo-cell detects a photon given that the source emits one is exactly one-half; but the probability that the source has emitted a photon given that the photo-cell detects one is certainly not one-half.

Let us see this in a very simple specific case. Suppose that we have a lamp L and a photo-cell (i.e. a photon detector) P. Between L and P we have a half-silvered mirror M, which is tilted at an angle, say 45°, to the line from L to P (see Fig. 8.3). Suppose that the lamp emits occasional photons from time to time, in some random fashion, and that the construction of the lamp (one could use parabolic mirrors) is such that these photons are always aimed very carefully at P. Whenever the photo-cell receives a photon it registers this fact, and we assume that it is 100 per cent reliable. It may also be assumed that whenever a photon is emitted, this fact is recorded at L, again with 100 per cent reliability. (There is no conflict with quantum-mechanical principles in any of these ideal requirements, though there might be difficulties in approaching such efficiency in practice.)

The half-silvered mirror M is such that it reflects exactly one-half of the photons that reach it and transmits the other half. More correctly, we must think of this quantum-mechanically. The photon's wavefunction impinges on the mirror and splits in two. There is an amplitude of $1/\sqrt{2}$ for the reflected part of the wave and $1/\sqrt{2}$ for the transmitted part. Both parts must be considered to 'coexist' (in the normal forward-time description) until the moment that an 'observation' is deemed to have been made. At that point these coexisting alternatives resolve themselves into actual alternatives - one or the other - with probabilities given by the squares of the (moduli of) these amplitudes, namely $(1/\sqrt{2})^2 = 1/2$, in each case. When the observation is made, the probabilities that the photon was reflected or transmitted turn out both to have been indeed one-half.

Let us see how this applies in our actual experiment. Suppose that L is recorded as having emitted a photon. The photon's wavefunction splits at the mirror, and it reaches P with amplitude $1/\sqrt{2}$, so the photo-cell registers or does not register, each with probability one-half. The other part of the photon's wavefunction reaches a point A on the laboratory wall (see Fig. 8.3), again with amplitude $1/\sqrt{2}$. If P does not register, then the photon must be considered to have hit the wall at A. For, had we placed another photo-cell at A, then it would always register whenever P does not register-assuming that L has indeed recorded the emission of a photon-and it would not register whenever P does register. In this sense, it is not necessary to place a photo-cell at A. We can infer what the photo-cell at A would have done, had it been there, merely by looking at L and P.

If P does not register, then the

photon must be considered to have hit the wall at A. For, had we placed

another photo-cell at A, then it would always register whenever P does not

register-assuming that L has indeed recorded the emission of a photon-and

it would not register whenever- P does register. In this sense, it is not

necessary to place a photo-cell at A. We can infer what the photo-cell at

A would have done, had it been there, merely by looking at L and P.

It should be clear how the quantum-mechanical calculation proceeds. We ask the question: 'Given that L registers, what is the probability that P registers?' To answer it, we note that there is an amplitude $1/\sqrt{2}$ for the photon traversing the path LMP and an amplitude $1/\sqrt{2}$ for it traversing the path LMA. Squaring, we find the respective probabilities 1/2 and 1/2 for it reaching P and reaching A. The quantum-mechanical answer to our question is therefore one-half.

Experimentally, this is indeed the answer that one would obtain. We could equally well use the eccentric 'reverse-time' procedure to obtain the same answer. Suppose that we note that P has registered. We consider a backwards-time wave function for the photon, assuming that the photon finally reaches P. As we trace backwards in time, the photon goes back from P until it reaches the mirror M. At this point, the wavefunction bifurcates, and there is a $1/\sqrt{2}$ amplitude for it to reach the lamp at L, and a $1/\sqrt{2}$ amplitude for it to be reflected at M to reach another point on the laboratory wall, namely B in Fig. 8.3. Squaring, we again get one-half for the two probabilities. But we must be careful to note what questions these probabilities are the answers to. They are the two questions, 'Given that L registers, what is the probability that P registers?', just as before, and the more eccentric question, 'Given that the photon is ejected from the wall at B, what is the probability that P registers?' We may consider that both these answers are, in a sense, experimentally 'correct', though the second one (ejection from the wall) would be an inference rather than the result of an actual series of experiments! However, neither of these questions is the time-reverse question of the one we asked before. That would be: 'Given that P registers, what is the probability that L registers?' We note that the correct experimental answer to this question is not 'one-half' at all, but one.

If the photo-cell indeed registers, then it is virtually certain that the photon came from the lamp and not from the laboratory wall! In the case of our time-reversed question, the quantum-mechanical calculation has given us completely the wrong answer!
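
The asymmetry can be made concrete with a small numerical sketch. It assumes, as the passage does, that each branch at the mirror carries amplitude 1/√2, and it treats the emission rates of the lamp L and of the wall point B as hypothetical inputs (the wall is taken never to emit). The function names are illustrative only.

```python
import math

# Amplitude for each branch at the half-silvered mirror, as in the text.
BRANCH_AMPLITUDE = 1 / math.sqrt(2)


def born_probability(amplitude: complex) -> float:
    """Squared modulus of an amplitude (the probability rule used in R)."""
    return abs(amplitude) ** 2


def prob_lamp_given_detection(lamp_rate: float, wall_rate: float) -> float:
    """P(photon came from L | P registers), by counting detected photons.

    lamp_rate and wall_rate are hypothetical emission rates for the lamp L
    and the wall point B; each emitted photon reaches P with probability 1/2.
    """
    reach_p = born_probability(BRANCH_AMPLITUDE)
    detected_from_lamp = lamp_rate * reach_p
    detected_from_wall = wall_rate * reach_p
    return detected_from_lamp / (detected_from_lamp + detected_from_wall)


# Forward question: given that L registers, does P register?
forward = born_probability(BRANCH_AMPLITUDE)
# Squaring the backwards-evolved amplitude gives the same number...
naive_reverse = born_probability(BRANCH_AMPLITUDE)
# ...but the experimental answer to the reversed question is different,
# because the wall at B never emits photons (assumed rate 0).
actual_reverse = prob_lamp_given_detection(lamp_rate=1.0, wall_rate=0.0)

print(f"P(P registers | L registers), forward rule: {forward:.2f}")
print(f"Squared backwards amplitude:                {naive_reverse:.2f}")
print(f"P(L registers | P registers), by counting:  {actual_reverse:.2f}")
```

The counting function is ordinary conditional probability; the point of the sketch is only that the squared backwards amplitude does not answer the time-reversed question.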

When does the state-vector reduce?

Suppose that we accept, on the basis of the preceding arguments, that the reduction of the state-vector might somehow be ultimately a gravitational phenomenon. Can the connections between R and gravity be made more explicit? When, on the basis of this view, should a state-vector collapse actually take place? I should first point out that even with the more 'conventional' approaches to a quantum gravity theory there are some serious technical difficulties involved with bringing the principles of general relativity to bear on the rules of quantum theory. These rules (primarily the way in which momenta are re-interpreted as differentiation with respect to position, in the expression for Schrödinger's equation, cf. p. 288) do not fit in at all well with the ideas of curved space-time geometry. My own point of view is that as soon as a significant amount of space-time curvature is introduced, the rules of quantum linear superposition must fail. It is here that the complex-amplitude superpositions of potentially alternative states become replaced by probability-weighted actual alternatives - and one of the alternatives indeed actually takes place. What do I mean by a 'significant' amount of curvature? I mean that the level has been reached where the measure of curvature introduced has about the scale of one graviton or more. (Recall that, according to the rules of quantum theory, the electromagnetic field is 'quantized' into individual units, called 'photons'. When the field is decomposed into its individual frequencies, the part of frequency ν can come only in whole numbers of photons, each of energy hν. Similar rules should presumably apply to the gravitational field.) One graviton would be the smallest unit of curvature that would be allowed according to the quantum theory.
The idea is that, as soon as this level is reached, the ordinary rules of linear superposition, according to the U procedure, become modified when applied to gravitons, and some kind of time-asymmetric 'non-linear instability' sets in. Rather than having complex linear superpositions of alternatives coexisting, however, one of the alternatives begins to win out at this stage, and the system 'flops' into one alternative or the other. Perhaps the choice of alternative is just made by chance, or perhaps there is something deeper underlying this choice. But now, reality has become one or the other. The procedure R has been effected. Note that, according to this idea, the procedure R occurs spontaneously in an entirely objective way, independent of any human intervention. The idea is that the 'one-graviton' level should lie comfortably between the 'quantum level', of atoms, molecules, etc. where the linear rules (U) of ordinary quantum theory hold good, and the 'classical level' of our everyday experiences. How 'big' is the one-graviton level? It should be emphasized that it is not really a question of physical size: it is more a question of mass and energy distribution. We have seen that quantum interference effects can occur over large distances provided that not much energy is involved. The characteristic quantum-gravitational scale of mass is what is known as the Planck mass mp = 10^-5 grams (approximately). This would seem to be rather larger than one would wish, since objects a good deal less massive than this, such as specks of dust, can be directly perceived as behaving in a classical way. (The mass mp is a little less than that of a flea.) However, I do not think that the one-graviton criterion would apply quite as crudely as this. I shall try to be a little more explicit, but at the time of writing there remain many obscurities and ambiguities as to how this criterion is to be precisely applied.
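
As a rough check on the quoted order of magnitude, the Planck mass can be computed from its standard definition, the square root of ħc/G. The sketch below uses rounded CODATA values for the constants; the variable names are illustrative.

```python
import math

# Rounded CODATA values in SI units.
HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m / s
G = 6.67430e-11         # Newton's constant, m^3 / (kg s^2)

# Standard definition of the Planck mass: sqrt(hbar * c / G).
planck_mass_kg = math.sqrt(HBAR * C / G)
planck_mass_g = planck_mass_kg * 1e3

print(f"Planck mass ~ {planck_mass_g:.2e} g")
```

The result is a couple of times 10^-5 grams, consistent with the approximate figure given in the text.
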
First let us consider a very direct kind of way in which a particle can be observed, namely by use of a Wilson cloud chamber. Here one has a chamber full of vapour which is just on the point of condensing into droplets. When a rapidly moving charged particle enters this chamber, having been produced, say, by the decay of a radioactive atom situated outside the chamber, its passage through the vapour causes some atoms close to its path to become ionized (i.e. charged, owing to electrons being dislodged). These ionized atoms act as centres along which little droplets condense out of the vapour. In this way, we get a track of droplets which the experimenter can directly observe. Now, what is the quantum-mechanical description of this? At the moment that our radioactive atom decays, it emits a particle. But there are many possible directions in which this emitted particle might travel. There will be an amplitude for this direction, an amplitude for that direction, and an amplitude for each other direction, all these occurring simultaneously in quantum linear superposition. The totality of all these superposed alternatives will constitute a spherical wave emanating from the decayed atom: the wave function of the emitted particle.

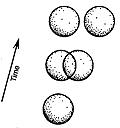

A charged particle entering a Wilson cloud chamber and causing

a string of droplets to condense.

As each possible particle track enters the cloud chamber it becomes associated with a string of ionized atoms each of which begins to act as a centre of condensation for the vapour. All these different possible strings of ionized atoms must also coexist in quantum linear superposition, so we now have a linear superposition of a large number of different strings of condensing droplets. At some stage, this complex quantum linear superposition becomes a real probability-weighted collection of actual alternatives, as the complex amplitude-weightings have their moduli squared according to the procedure R. Only one of these is realized in the actual physical world of experience, and this particular alternative is the one observed by the experimenter. According to the viewpoint that I am proposing, that stage occurs as soon as the difference between the gravitational fields of the various alternatives reaches the one-graviton level. When does this happen? According to a very rough calculation, if there had been just one completely uniform spherical droplet, then the one-graviton stage would be reached when the droplet grows to about one hundredth of mp, which is one ten-millionth of a gram. There are many uncertainties in this calculation (including some difficulties of principle), and the size is a bit large for comfort, but the result is not totally unreasonable. It is to be hoped that some more precise results will be forthcoming later, and that it will be possible to treat a whole string of droplets rather than just a single droplet. Also there may be some significant differences when the fact is taken into account that the droplets are composed of a very large number of tiny atoms, rather than being totally uniform. Moreover, the 'one-graviton' criterion itself needs to be made considerably more mathematically precise.
In the above situation, I have considered what might be an actual observation of a quantum process (the decay of a radioactive atom) where quantum effects have been magnified to the point at which the different quantum alternatives produce different, directly observable, macroscopic alternatives. It would be my view that R can take place objectively even when such a manifest magnification is not present. Suppose that rather than entering a cloud chamber, our particle simply enters a large box of gas (or fluid), of such density that it is practically certain that it will collide with, or otherwise disturb, a large number of the atoms in the gas. Let us consider just two of the alternatives for the particle, as part of the initial complex linear superposition: it might not enter the box at all, or it might enter along a particular path and ricochet off some atom of gas. In the second case, that gas atom will then move off at great speed, in a way that it would not have done otherwise had the particle not run into it, and it will subsequently collide with, and itself ricochet off, some further atom. The two atoms will each then move off in ways that they would not have done otherwise, and soon there will be a cascade of movement of atoms in the gas which would not have happened had the particle not initially entered the box. Before long, in this second case, virtually every atom in the gas will have become disturbed by this motion. Now think of how we would have to describe this quantum mechanically. Initially it is only the original particle whose different positions must be considered to occur in complex linear superposition - as part of the particle's wave function.

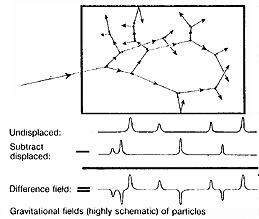

Fig. 8.8. If a particle enters a large box of some gas, then before long virtually every atom of the gas will have been disturbed. A quantum linear superposition of the particle entering, and of the particle not entering, would involve the linear superposition of two different space-time geometries, describing the gravitational fields of the two arrangements of gas particles. When does the difference between these geometries reach the one-graviton level?

But after a short while, all the atoms of the gas must be involved. Consider the complex superposition of two paths that might be taken by the particle, one entering the box and the other not. Standard quantum mechanics insists that we extend this superposition to all the atoms in the gas: we must superpose two states, where the gas atoms in one state are all moved from their positions in the other state. Now consider the difference in the gravitational fields of the totality of individual atoms. Even though the overall distribution of gas is virtually the same in the two states to be superposed (and the overall gravitational fields would be practically identical), if we subtract one field from the other, we get a (highly oscillating) difference field that might well be 'significant' in the sense that I mean it here - where the one-graviton level for this difference field is easily exceeded. As soon as this level is reached, then state-vector reduction takes place: in the actual state of the system, either the particle has entered the box of gas or it has not. The complex linear superposition has reduced to statistically weighted alternatives, and only one of these actually takes place. In the previous example, I considered a cloud chamber as a way of providing a quantum-mechanical observation. It seems to me to be likely that other types of such observation (photographic plates, spark chambers, etc.) can be treated using the 'one-graviton criterion' by approaching them in the way that I have indicated for the box of gas above. Much work remains to be done in seeing how this procedure might apply in detail. So far, this is only the germ of an idea for what I believe to be a much-needed new theory. Any totally satisfactory scheme would, I believe, have to involve some very radical new ideas about the nature of space-time geometry, probably involving an essentially non-local description.
One of the most compelling reasons for believing this comes from the EPR-type experiments, where an 'observation' (here, the registering of a photo-cell) at one end of a room can effect the simultaneous reduction of the state vector at the other end. The construction of a fully objective theory of state-vector reduction which is consistent with the spirit of relativity is a profound challenge, since 'simultaneity' is a concept which is foreign to relativity, being dependent upon the motion of some observer. It is my opinion that our present picture of physical reality, particularly in relation to the nature of time, is due for a grand shake-up - even greater, perhaps, than that which has already been provided by present-day relativity and quantum mechanics. We must come back to our original question. How does all this relate to the physics which governs the actions of our brains? What could it have to do with our thoughts and with our feelings? To attempt some kind of answer, it will be necessary first to examine something of how our brains are actually constructed. I shall return afterwards to what I believe to be the fundamental question: what kind of new physical action is likely to be involved when we consciously think or perceive?

Roger Penrose on Gravitational Reduction

of the Wave Packet Part 2:

From: Shadows of the Mind

Quantum theory and reality

6.10 Gravitationally induced state-vector reduction?

There are strong reasons for suspecting that the modification of quantum theory that will be needed, if some form of R is to be made into a real physical process, must involve the effects of gravity in a serious way. Some of these reasons have to do with the fact that the very framework of standard quantum theory fits most uncomfortably with the curved-space notions that Einstein's theory of gravity demands. Even such concepts as energy and time, basic to the very procedures of quantum theory, cannot, in a completely general gravitational context, be precisely defined consistently with the normal requirements of standard quantum theory. Recall, also, the light-cone 'tilting' effect that is unique to the physical phenomenon of gravity. One might expect, accordingly, that some modification of the basic principles of quantum theory might arise as a feature of its (eventual) appropriate union with Einstein's general relativity.

Yet most physicists seem reluctant to accept the possibility that it might be the quantum theory that requires modification for such a union to be successful. Instead, they argue, Einstein's theory itself should be modified. They may point, quite correctly, to the fact that classical general relativity has its own problems, since it leads to space-time singularities, such as are encountered in black holes and the big bang, where curvatures mount to infinity and the very notions of space and time cease to have validity. I do not myself doubt that general relativity must itself be modified when it is appropriately unified with quantum theory. And this will indeed be important for the understanding of what actually takes place in those regions that we presently describe as 'singularities'. But it does not absolve quantum theory from a need for change. We saw that general relativity is an extraordinarily accurate theory - no less accurate than is quantum theory itself. Most of the physical insights that underlie Einstein's theory will surely survive, not less than will most of those of quantum theory, when the appropriate union that moulds these two great theories together is finally found. Many who might agree with this, however, would still argue that the relevant scales in which any form of quantum gravity might become relevant would be totally inappropriate for the quantum measurement problem. They would point to the length scale that characterizes quantum gravity, called the Planck scale, of 10^-33 cm, which is some 20 orders of magnitude smaller even than a nuclear particle; and they would severely question how the physics at such tiny distances could have anything to do with the measurement problem which, after all, is concerned with phenomena that (at least) border upon the macroscopic domain.
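
The two figures quoted here can be checked with a short calculation. The sketch assumes the standard definition of the Planck length, the square root of ħG/c³, with rounded CODATA constants, and takes 10^-13 cm as the size of a nuclear particle (the 'strong interaction size' used later in the text).

```python
import math

# Rounded CODATA values in SI units.
HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m / s
G = 6.67430e-11         # Newton's constant, m^3 / (kg s^2)

# Standard definition of the Planck length, converted from metres to cm.
planck_length_cm = math.sqrt(HBAR * G / C**3) * 100.0

# Assumed size of a nuclear particle, in cm.
nuclear_size_cm = 1e-13

orders_of_magnitude = math.log10(nuclear_size_cm / planck_length_cm)

print(f"Planck length ~ {planck_length_cm:.2e} cm")
print(f"Nuclear size is ~ {orders_of_magnitude:.1f} orders of magnitude larger")
```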

Fig. 6.4. Instead of having a cat, the measurement could consist

of the simple movement of a spherical lump. How big or massive must the

lump be, or how far must it move, for R to take place?

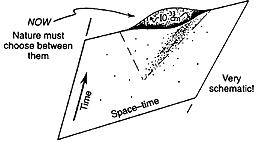

However, there is a misconception here as to how quantum gravity ideas might be applied. For 10^-33 cm is indeed relevant, but not in the way that first comes to mind. Let us consider a type of situation, somewhat like that of Schrödinger's cat, in which one strives to produce a state in which a pair of macroscopically distinguishable alternatives are linearly superposed. For example, in Fig. 6.4 such a situation is depicted, where a photon impinges upon a half-silvered mirror, the photon state becoming a linear superposition of a transmitted and reflected part. The transmitted part of the photon's wavefunction activates (or would activate) a device which moves a macroscopic spherical lump (rather than a cat) from one spatial location to another. So long as Schrödinger evolution U holds good, the 'location' of the lump involves a quantum superposition of its being in the original position with its being in the displaced position. If R were to come into effect as a real physical process, then the lump would 'jump' either to one position or to the other - and this would constitute an actual 'measurement'. The idea here is that, as with the GRW theory, this is indeed an entirely objective physical process, and it would occur whenever the mass of the lump is large enough or the distance it moves, far enough. (In particular, it would have nothing to do with whether or not a conscious being might happen to have actually 'perceived' the movement, or otherwise, of the lump.) I am imagining that the device that detects the photon and moves the lump is itself small enough that it can be treated entirely quantum-mechanically, and it is only the lump that registers the measurement. For example, in an extreme case, we might simply imagine that the lump is poised sufficiently unstably that the mere impact of the photon would be enough to cause it to move away significantly.
Applying the standard U-procedures of quantum mechanics, we find that the photon's state, after it has encountered the mirror, would consist of two parts in two very different locations. One of these parts then becomes entangled with the device and finally with the lump, so we have a quantum state which involves a linear superposition of two quite different positions for the lump. Now the lump will have its gravitational field, which must also be involved in this superposition. Thus, the state involves a superposition of two different gravitational fields. According to Einstein's theory, this implies that we have two different space-time geometries superposed! The question is: is there a point at which the two geometries become sufficiently different from each other that the rules of quantum mechanics must change, and rather than forcing the different geometries into superposition, Nature chooses between one or the other of them and actually effects some kind of reduction procedure resembling R?

The point is that we really have no conception of how to consider linear superpositions of states when the states themselves involve different space-time geometries. A fundamental difficulty with 'standard theory' is that when the geometries become significantly different from each other, we have no absolute means of identifying a point in one geometry with any particular point in the other - the two geometries are strictly separate spaces - so the very idea that one could form a superposition of the matter states within these two separate spaces becomes profoundly obscure. Now, we should ask when are two geometries to be considered as actually 'significantly different' from one another? It is here, in effect, that the Planck scale of 10^-33 cm comes in. The argument would roughly be that the scale of the difference between these geometries has to be, in an appropriate sense, something like 10^-33 cm or more for reduction to take place. We might, for example, attempt to imagine (Fig. 6.5) that these two geometries are trying to be forced into coincidence, but when the measure of the difference becomes too large, on this kind of scale, reduction R takes place so, rather than the superposition involved in U being maintained, Nature must choose one geometry or the other.

What kind of scale of mass or of distance moved would such a tiny change in geometry correspond to? In fact, owing to the smallness of gravitational effects, this turns out to be quite large, and not at all unreasonable as a demarcation line between the quantum and classical levels. In order to get a feeling for such matters, it will be useful to say something about absolute (or Planckian) units.

Fig. 6.5. What is the relevance of the Planck scale of 10^-33 cm to quantum state reduction? Rough idea: when there is sufficient mass movement between the two states under superposition such that the two resulting space-times differ by something of the order of 10^-33 cm.

6.12 The new criterion

I shall now give a new criterion for gravitationally induced state-vector reduction that differs significantly from that suggested in ENM, but which is close to some recent ideas due to Diósi and others. The motivations for a link between gravity and the R-procedure, as given in ENM, still hold good, but the suggestion that I am now making has some additional theoretical support from other directions. Moreover, it is free of some of the conceptual problems involved in the earlier definition, and it is much easier to use. The proposal in ENM was for a criterion, according to which two states might be judged (with regard to their respective gravitational fields, i.e. their respective space-times) to be too different from one another for them to be able to co-exist in quantum linear superposition. Accordingly, R would have to take place at that stage. The present idea is a little different. We do not seek an absolute measure of gravitational difference between states which determines when the states differ too much from each other for superposition to be possible. Instead, we regard superposed widely differing states as unstable - rather like an unstable uranium nucleus, for example - and we ask that there be a rate of state-vector reduction determined by such a difference measure. The greater the difference, the faster would be the rate at which reduction takes place. For clarity, I shall first apply the new criterion in the particular situation that was described in 6.10 above, but it can also be easily generalized to cover many other examples. Specifically, we consider the energy that it would take, in the situation above, to displace one instance of the lump away from the other, taking just gravitational effects into account. Thus, we imagine that we have two lumps (masses), initially in coincidence and interpenetrating one another (Fig. 6.6), and we then imagine moving one instance of the lump away from the other, slowly, reducing the degree of interpenetration as we go, until the two reach the state of separation that occurs in the superposed state under consideration. Taking the reciprocal of the gravitational energy that this operation would cost us, measured in absolute units,* we get the approximate time, also in absolute units, that it would take before state reduction occurs, whereupon the lump's superposed state would spontaneously jump into one localized state or the other.

*We may prefer to express this reduction time in more usual units than the absolute units adopted here. In fact, the expression for the reduction time is simply ħ/E, where E is the gravitational separation energy referred to above, with no other absolute constant appearing other than ħ. The fact that the speed of light c does not feature suggests that a 'Newtonian' model theory of this nature would be worth investigating, as has been done by Christian (1994).

Fig. 6.6. To compute the reduction time ħ/E, imagine moving one instance of the lump away from the other and compute the energy E that this would cost, taking into account only their gravitational attraction.

If we take the lump to be spherical, with mass m and radius a, we obtain a quantity of the general order of $m^2/a$ for this energy. In fact the actual value of the energy depends upon how far the lump is moved away, but this distance is not very significant provided that the two instances of the lump do not (much) overlap when they reach their final displacement. The additional energy that would be required in moving away from the contact position, even all the way out to infinity, is of the same order (5/7 times as much) as that involved in moving from coincidence to the contact position. Thus, as far as orders of magnitude are concerned, one can ignore the contribution due to the displacement of the lumps away from each other after separation, provided that they actually (essentially) separate. The reduction time, according to this scheme, is of the order of $a/m^2$ measured in absolute units, or, very roughly, $1/(20\rho^2 a^5)$, where $\rho$ is the density of the lump. This gives about 10^186/a^5, for something of ordinary density (say, a water droplet). It is reassuring that this provides very 'reasonable' answers in certain simple situations. For example, in the case of a nucleon (neutron or proton), where we take a to be its 'strong interaction size' 10^-13 cm, which in absolute units is nearly 10^20, and we take m to be about 10^-19, we get a reduction time of nearly 10^58, which is over ten million years. It is reassuring that this time is large, because quantum interference effects have been directly observed for individual neutrons. Had we obtained a very short reduction time, this would have led to conflict with such observations.
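
The nucleon estimate can be reproduced in a few lines. This is a sketch under stated assumptions: the reduction time is taken as a/m² in absolute units, the nucleon mass is taken as about 10^-19 in those units (the value that reproduces the quoted 10^58), and the Planck time used for conversion is a rounded standard value. The sphere-energy coefficients are the Newtonian results for two identical uniform spheres and are an addition for illustration, not quoted from the text.

```python
from fractions import Fraction

# Newtonian interaction energy of two identical uniform spheres, in units of
# G m^2 / a (assumed textbook coefficients, used here only for illustration).
BINDING_COINCIDENT = Fraction(6, 5)  # centres coincide
BINDING_CONTACT = Fraction(1, 2)     # centres a distance 2a apart, touching

energy_to_contact = BINDING_COINCIDENT - BINDING_CONTACT  # coincidence -> contact
energy_to_infinity = BINDING_CONTACT                      # contact -> infinity
extra_fraction = energy_to_infinity / energy_to_contact

PLANCK_TIME_S = 5.39e-44    # rounded Planck time in seconds
SECONDS_PER_YEAR = 3.156e7  # rounded


def reduction_time_absolute(mass: float, radius: float) -> float:
    """Order-of-magnitude reduction time a / m^2, all in absolute units."""
    return radius / mass**2


# Nucleon: radius ~10^20 and mass ~10^-19 in absolute units (assumed values).
nucleon_time = reduction_time_absolute(mass=1e-19, radius=1e20)
nucleon_years = nucleon_time * PLANCK_TIME_S / SECONDS_PER_YEAR

print(f"Extra energy, contact to infinity: {extra_fraction} of coincidence-to-contact")
print(f"Nucleon reduction time ~ {nucleon_time:.0e} absolute units")
print(f"                       ~ {nucleon_years:.1e} years")
```

Because the extra energy from contact to infinity is a fraction of order one, dropping it changes the estimate by less than a factor of two, which is why the text ignores it.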

Fig. 6.7. Suppose that instead of moving a lump, the transmitted

part of the photon's state is simply absorbed in a body of fluid matter.

If we consider something more 'macroscopic', say a minute speck of water of radius 10^-5 cm, we get a reduction time measured in hours; if the speck were of radius 10^-4 cm (a micron), the reduction time, according to this scheme, would be about a twentieth of a second; if of radius 10^-3 cm, then less than a millionth of a second. In general, when we consider an object in a superposition of two spatially displaced states, we simply ask for the energy that it would take to effect this displacement, considering only the gravitational interaction between the two. The reciprocal of this energy measures a kind of 'half-life' for the superposed state. The larger this energy, the shorter would be the time that the superposed state could persist.

In an actual experimental situation, it would be very hard to keep the quantum-superposed lumps from disturbing, and becoming entangled with, the material in the surrounding environment, in which case we would have to consider the gravitational effects involved in this environment also. This would be relevant even if the disturbance did not result in significant macroscopic-scale movements of mass in the environment. Even the tiny displacements of individual particles could well be important, though normally at a somewhat larger total scale of mass than with a macroscopic 'lump' movement.
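
The droplet figures quoted above can be reproduced, to order of magnitude, from the estimate of about 10^186/a^5 for a lump of water density, with the radius a expressed in absolute units. The following Python sketch is a minimal illustration; the rounded Planck length and Planck time and the helper name `lump_reduction_time_s` are assumptions, not part of the text.

```python
# Reduction time for a water-density lump displaced bodily,
# using the text's estimate of about 1e186 / a**5 (a in absolute units).

PLANCK_LENGTH_CM = 1.616e-33   # rounded value
PLANCK_TIME_S = 5.391e-44      # rounded value


def lump_reduction_time_s(radius_cm):
    """Approximate reduction time in seconds for a lump of the given radius."""
    a = radius_cm / PLANCK_LENGTH_CM          # radius in absolute units
    return 1e186 / a ** 5 * PLANCK_TIME_S     # convert Planck times to seconds


for radius_cm in (1e-5, 1e-4, 1e-3):
    print(f"radius {radius_cm:.0e} cm: ~{lump_reduction_time_s(radius_cm):.1e} s")
```

The three radii give, respectively, a time of a few thousand seconds, a few hundredths of a second, and somewhat under a microsecond, in line with the values stated above. The steep a^5 dependence is why a factor of ten in radius changes the time by five orders of magnitude.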

In order to clarify the effect that a disturbance of this kind might have in the present scheme, let us replace the lump-moving device, in the above idealized experimental situation, by a lump of fluid matter that simply absorbs the photon, if it is transmitted through the mirror (Fig. 6.7), so now the lump itself is playing the role of the 'environment'. Instead of having to consider a linear superposition between two states that are macroscopically distinct from each other, by virtue of one instance of the lump having been moved bodily with respect to the other, we are now just concerned with the difference between two configurations of atomic positions, where one configuration of particles is displaced randomly from the other. For a lump of ordinary fluid material of radius a, we may now expect to find a reduction time that is perhaps something of the order of 10^130/a^3 (depending, to some extent, on what assumptions are made) rather than the 10^186/a^5 that was relevant for the collective movement of the lump. This suggests that somewhat larger lumps would be needed in order to effect reduction than would have been the case for a lump being bodily moved. However, reduction would still occur, according to this scheme, even though there is no macroscopic overall movement.

Recall the material obstruction that intercepted the photon beam, in our discussion of quantum interference in §5.8. The mere absorption (or potentiality for absorption) of a photon by such an obstruction would be sufficient to effect R, despite the fact that nothing macroscopic would occur that is actually observable. This also shows how a sufficient disturbance in an environment that is entangled with some system under consideration would itself effect R, and so contact is made with more conventional FAPP procedures. Indeed, in almost any practical measuring process it would be very likely that large numbers of microscopic particles in the surrounding environment would become disturbed.
According to the ideas being put forward here, it would often be this that would be the dominant effect, rather than macroscopic bodily movement of objects, as with the 'lump displacement' initially described above. Unless the experimental situation is very carefully controlled, any macroscopic movement of a sizeable object would disturb large amounts of the surrounding environment, and it is probable that the environment's reduction time (perhaps about 10^130/b^3, where b is the radius of the region of water-density entangled environment under consideration) would dominate (i.e. be much smaller than) the time 10^186/a^5 that might be relevant to the object itself. For example, if the radius b of disturbed environment were as little as about a tenth of a millimetre, then reduction would take place in something of the order of a millionth of a second for that reason alone. Such a picture has a lot in common with the conventional description that was discussed in §6.6, but now we have a definite criterion for R actually to occur in this environment.

Recall the objections that were raised in §6.6 to the conventional FAPP picture, as a description of an actual physical reality. With a criterion such as the one being promoted here, these objections no longer hold. Once there is a sufficient disturbance in the environment, according to the present ideas, reduction will rapidly actually take place in that environment, and it would be accompanied by reduction in any 'measuring apparatus' with which that environment is entangled. Nothing could reverse that reduction and enable the original entangled state to be resurrected, even imagining enormous advances in technology. Accordingly, there is no contradiction with the measuring apparatus actually registering either YES or NO, as in the present picture it would indeed do.
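
To make the comparison between the two estimates concrete, the Python sketch below evaluates the environment time of about 10^130/b^3 alongside the bodily-displacement time of about 10^186/a^5, and takes the shorter of the two as the dominant one. The rounded Planck constants, the function names and the example object radius of 10^-5 cm are assumptions chosen for illustration; both formulas are the rough water-density estimates quoted in the text.

```python
# Compare the object's own reduction time with that of the
# disturbed environment; the shorter time dominates.

PLANCK_LENGTH_CM = 1.616e-33   # rounded value
PLANCK_TIME_S = 5.391e-44      # rounded value


def to_absolute_units(radius_cm):
    """Convert a length in centimetres to absolute (Planck) units."""
    return radius_cm / PLANCK_LENGTH_CM


def object_time_s(a_cm):
    """Bodily displacement of a water-density lump: about 1e186 / a**5."""
    return 1e186 / to_absolute_units(a_cm) ** 5 * PLANCK_TIME_S


def environment_time_s(b_cm):
    """Random particle displacements in a region of radius b: about 1e130 / b**3."""
    return 1e130 / to_absolute_units(b_cm) ** 3 * PLANCK_TIME_S


def dominant_time_s(a_cm, b_cm):
    """Reduction is governed by whichever process acts faster."""
    return min(object_time_s(a_cm), environment_time_s(b_cm))


b_cm = 1e-2   # a tenth of a millimetre of disturbed environment
a_cm = 1e-5   # hypothetical object radius, for illustration only
print(f"environment: ~{environment_time_s(b_cm):.1e} s")
print(f"object:      ~{object_time_s(a_cm):.1e} s")
print(f"dominant:    ~{dominant_time_s(a_cm, b_cm):.1e} s")
```

For b of a tenth of a millimetre the environment time is of the order of a microsecond, far shorter than the hours-scale time of the small object on its own, so the environment term governs the reduction in this example.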
I imagine that a description of this nature would be relevant in many biological processes, and it would provide the likely reason that biological structures of a size much smaller than a micron's diameter can, no doubt, often behave as classical objects. A biological system, being very much entangled with its environment in the manner discussed above, would have its own state continually reduced because of the continual reduction of this environment. We may imagine, on the other hand, that for some reason it might be favourable to a biological system that its state remain unreduced for a long time, in appropriate circumstances. In such cases it would be necessary for the system to be, in some way, very effectively insulated from its surroundings. These considerations will be important for us later (§7.5).

A point that should be emphasized is that the energy that defines the lifetime of the superposed state is an energy difference, and not the total (mass-)energy that is involved in the situation as a whole. Thus, for a lump that is quite large but does not move very much, and supposing that it is also crystalline, so that its individual atoms do not get randomly displaced, quantum superpositions could be maintained for a long time. The lump could be much larger than the water droplets considered above. There could also be other very much larger masses in the vicinity, provided that they do not get significantly entangled with the superposed state we are concerned with. (These considerations would be important for solid-state devices, such as gravitational wave detectors, that use coherently oscillating solid, perhaps crystalline, bodies.)

So far, the orders of magnitude seem to be quite plausible, but clearly more work is needed to see whether the idea will survive more stringent examination.
A crucial test would be to find experimental situations in which standard theory would predict effects dependent upon large-scale quantum superpositions, but at a level where the present proposals demand that such superpositions cannot be maintained. If the conventional quantum expectations are supported by observation, in such situations, then the ideas that I am promoting would have to be abandoned, or at least severely modified. If observation indicates that the superpositions are not maintained, then that would lend some support to the present ideas. Unfortunately, I am not aware of any practical suggestions for appropriate experiments, as yet. Superconductors, and devices such as SQUIDs (that depend upon the large-scale quantum superpositions that occur with superconductors), would seem to present a promising area of experiment relevant to these issues (see Leggett 1984). However, the ideas I am promoting would need some further development before they could be directly applied in these situations. With superconductors, very little mass displacement occurs between the different superposed states. There is a significant momentum displacement instead, however, and the present ideas would need some further theoretical development in order to cover this situation.

The ideas put forward above would need to be reformulated somewhat, even to treat the simple situation of a cloud chamber, where the presence of a charged particle is signalled by the condensation of small droplets out of the surrounding vapour. Suppose that we have a quantum state of a charged particle consisting of a linear superposition of the particle being at some location in the cloud chamber and of the particle being outside the chamber. The part of the particle's state vector that is within the chamber initiates the formation of a droplet, but the part for which the particle is outside does not, so that state now consists of a superposition of two macroscopically different states.
In one of these there is a droplet forming from the vapour, and in the other there is just uniform vapour. We need to estimate the gravitational energy involved in pulling the vapour molecules away from their counterparts, in the two states that are being considered in superposition. However, now there is an additional complication because there is also a difference between the gravitational self-energy of the droplet and of the uncondensed vapour. In order to cover such situations, a different formulation of the above suggested criterion may be appropriate. We can now consider the gravitational self-energy of that mass distribution which is the difference between the mass distributions of the two states that are to be considered in quantum linear superposition. The reciprocal of this self-energy gives an alternative proposal for the reduction timescale. In fact, this alternative formulation gives just the same result as before, in those situations considered earlier, but it gives a somewhat different (more rapid) reduction time in the case of a cloud chamber. Indeed, there are various alternative general schemes for reduction times, giving different answers in certain situations, although agreeing with each other for the simple two-state superposition involving a rigid displacement of a lump, as envisaged at the beginning of this section. The original such scheme is that of Diósi (1989) (which encountered some difficulties, as pointed out by Ghirardi, Grassi, and Rimini (1990), who also suggested a remedy). I shall not distinguish between these various proposals here, which will all come under the heading of 'the proposal of §6.12' in the following chapters.

What are the motivations for the specific proposal for a 'reduction time' that is being put forward here? My own initial motivations were rather too technical for me to describe here and were, in any case, inconclusive and incomplete.
I shall present an independent case for this kind of physical scheme in a moment. Though also incomplete as it stands, the argument seems to suggest a powerful underlying consistency requirement that gives additional support for believing that state reduction must ultimately be a gravitational phenomenon of the general nature of the one being proposed here.

The problem of energy conservation in the GRW-type schemes was already referred to in §6.9. The 'hits' that particles are involved in (when their wavefunctions get spontaneously multiplied by Gaussians) cause small violations of energy conservation to take place. Moreover, there seems to be a non-local transfer of energy with this kind of process. This would appear to be a characteristic (and apparently unavoidable) feature of theories of this general type, where the R-procedure is taken to be a real physical effect. To my mind, this provides strong additional evidence for theories in which gravitational effects play a crucial role in the reduction process. For energy conservation in general relativity is a subtle and elusive issue. The gravitational field itself contains energy, and this energy measurably contributes to the total energy (and therefore to the mass, by Einstein's E = mc^2) of a system. Yet it is a nebulous energy that inhabits empty space in a mysterious non-local way. Recall, in particular, the mass-energy that is carried away, in the form of gravitational waves, from the binary pulsar system PSR 1913+16 (cf. §4.5); these waves are ripples in the very structure of empty space. The energy that is contained in the mutual attractive fields of the two neutron stars is also an important ingredient of their dynamics which cannot be ignored. But this kind of energy, residing in empty space, is of an especially slippery kind. It cannot be obtained by the 'adding up' of local contributions of energy density; nor can it even be localized in any particular region of space-time (see ENM, pp. 220-1).
It is tempting to relate the equally slippery non-local energy problems of the R-procedure to those of classical gravity, and to offset one against the other so as to provide a coherent overall picture. Do the suggestions that I have been putting forward here achieve this overall coherence? I believe that there is a very good chance that they can be made to, but the precise framework for achieving this is not yet to hand. One can see, however, that there is certainly good scope for this in principle. For, as mentioned earlier, we can think of the reduction process as something rather like the decay of an unstable particle or nucleus. Think of the superposed state of a lump in two different locations as being like an unstable nucleus that decays, after a characteristic 'half-life' timescale, into something else more stable. In the case of the superposed lump locations we likewise think of an unstable quantum state which decays, after a characteristic lifetime (given, roughly on average, by the reciprocal of the gravitational energy of separation), to a state where the lump is in one location or the other, representing two possible decay modes.
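
The decay analogy can be illustrated with a small simulation. The Python sketch below treats reduction as a random decay whose mean lifetime is the reciprocal of the gravitational energy of separation, and then picks one of the two lump locations as the 'decay mode'. The exponential waiting-time law, the equal outcome probabilities (as for an equal-amplitude superposition), the example energy value and all names are assumptions made for illustration; the text itself specifies only the average lifetime.

```python
import random


def simulate_reduction(energy, p_first=0.5, rng=random):
    """Sample one reduction event.

    energy  -- gravitational energy of separation, in absolute units
    p_first -- assumed probability of ending in the first location
    Returns (waiting_time, outcome); the time is in absolute units.
    """
    mean_lifetime = 1.0 / energy
    # Exponential waiting time with the given mean (an assumed decay law).
    waiting_time = rng.expovariate(1.0 / mean_lifetime)
    outcome = "location A" if rng.random() < p_first else "location B"
    return waiting_time, outcome


rng = random.Random(0)   # fixed seed so the run is repeatable
energy = 2.0             # hypothetical energy, giving a mean lifetime of 0.5
samples = [simulate_reduction(energy, rng=rng) for _ in range(20000)]

mean_time = sum(t for t, _ in samples) / len(samples)
fraction_a = sum(1 for _, o in samples if o == "location A") / len(samples)
print(f"mean waiting time ~ {mean_time:.3f} (expected about {1.0 / energy})")
print(f"fraction ending in location A ~ {fraction_a:.3f}")
```

Doubling the energy halves the mean waiting time, which is the sense in which a larger energy of separation means a shorter-lived superposition.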