Looking-glass croquet has Alice hitting rolled-up hedgehogs, each bearing an uncanny resemblance to Werner Heisenberg, towards a wall. Classically, the hedgehogs always bounce off. Quantum mechanically, however, a small probability exists that a hedgehog will appear on the far side. The puzzle facing quantum mechanics is: how long does it take to go through the wall? Does the traversal time violate Einstein's light-speed limit?

Faster than Light

Raymond Chiao, Paul Kwiat, Aephraim Steinberg, Scientific American, August 1993

For experimentalists studying quantum mechanics, the fantastic often turns into reality. A recent example emerges from the study of a phenomenon known as nonlocality, or "action at a distance." This concept calls into question one of the most fundamental tenets of modern physics, the proposition that nothing travels faster than the speed of light. An apparent violation of this proposition occurs when a particle at a wall vanishes, only to reappear, almost instantaneously, on the other side. A reference to Lewis Carroll may help here. When Alice stepped through the looking glass, her movement constituted in some sense action at a distance, or nonlocality: her effortless passage through a solid object was instantaneous. The particle's behavior is equally odd. If we attempted to calculate the particle's average velocity, we would find that it exceeded the speed of light. Is this possible? Can one of the most famous laws of modern physics be breached with impunity? Or is there something wrong with our conception of quantum mechanics or with the idea of a "traversal velocity"? To answer such questions, we and several other workers have recently conducted many optical experiments to investigate some of the manifestations of quantum nonlocality. In particular, we focus on three demonstrations of nonlocal effects. In the first example, we "race" two photons, one of which must move through a "wall." In the second instance, we look at how the race is timed, showing that each photon travels along the two different race paths simultaneously. The final experiment reveals how the simultaneous behavior of photon twins is coupled, even if the twins are so far apart that no signal has time to travel between them.

The distinction between locality and nonlocality is related to the concept of a trajectory. For example, in the classical world a rolling croquet ball has a definite position at every moment. If each moment is captured as a snapshot and the pictures are joined, they form a smooth, unbroken line, or trajectory, from the player's mallet to the hoop. At each point on this trajectory, the croquet ball has a definite speed, which is related to its kinetic energy. If it travels on a flat pitch, it rolls to its target. But if the ball begins to roll up a hill, its kinetic energy is converted into potential energy. As a result, it slows, eventually to stop and roll back down. In the jargon of physics, such a hill is called a barrier, because the ball does not have enough energy to travel over it, and, classically, it always rolls back. Similarly, if Alice were unable to hit croquet balls (or rolled-up hedgehogs, as Carroll would have them) with enough energy to send them crashing through a brick wall, they would merely bounce off. According to quantum mechanics, this concept of a trajectory is flawed. The position of a quantum mechanical particle, unlike that of a croquet ball, is not described as a precise mathematical point. Rather, the particle is best represented as a smeared-out wave packet. This packet can be seen as resembling the shell of a tortoise, because it rises from its leading edge to a certain height and then slopes down again to its trailing edge. The height of the wave at a given position along this span indicates the probability that the particle occupies that position: the higher a given part of the wave packet, the more likely the particle is located there. The width of the packet from front to back represents the intrinsic uncertainty of the particle's location [see box]. When the particle is detected at one point, however, the entire wave packet disappears. Quantum mechanics does not tell us where the particle has been before this moment.
This uncertainty in location leads to one of the most remarkable consequences of quantum mechanics. If the hedgehogs are quantum mechanical, then the uncertainty of position permits the beasts to have a very small but perfectly real chance of appearing on the far side of the wall. This process is known as tunneling and plays a major role in science and technology. Tunneling is of central importance in nuclear fusion, certain high-speed electronic devices, the highest-resolution microscopes in existence and some theories of cosmology. In spite of the name "tunneling," the barrier is intact at all times. In fact, if a particle were inside the barrier, its kinetic energy would be negative. Velocity is proportional to the square root of the kinetic energy, and so in the tunneling case one must take the square root of a negative number. Hence, it is impossible to ascribe a real velocity to the particle in the barrier. This is why, when looking at the watch it has borrowed from the White Rabbit, the hedgehog that has tunneled to the far side of the wall wears (like most physicists since the 1930s) a puzzled expression. What time does the hedgehog see? In other words, how long did it take to tunnel through the barrier? Over the years, many attempts have been made to answer the question of the tunneling time, but none has been universally accepted. Using photons rather than hedgehogs, our group has recently completed an experiment that provides one concrete definition of this time. Photons are the elementary particles from which all light is made; a typical light bulb emits more than 100 billion such particles in one billionth of a second. Our experiment does not need nearly so many of them. To make our measurements, we used a light source that emits a pair of photons simultaneously. Each photon travels toward a different detector. A barrier is placed in the path of one of these photons, whereas the other is allowed to fly unimpeded.
Most of the time, the first photon bounces off the barrier and is lost; only its twin is detected. Occasionally, however, the first photon tunnels through the barrier, and both photons reach their respective detectors. In this situation, we can compare their arrival times and thus see how long the tunneling process took. The role of the barrier was played by a common optical element: a mirror. This mirror, however, is unlike the ordinary household variety (which relies on a metallic coating and absorbs as much as 15 percent of the incident light). The laboratory mirrors consist of thin, alternating layers of two different types of transparent glass, through which light travels at slightly different speeds. These layers act as periodic "speed bumps." Individually, they would do little more than slow the light down. But when taken together and spaced appropriately, they form a region in which light finds it essentially impossible to travel. A multilayer coating one micron thick (one one-hundredth of the diameter of a typical human hair) reflects 99 percent of incident light at the photon energy (or, equivalently, the color of the light) for which it is designed. Our experiment looks at the remaining 1 percent of the photons, which tunnel through this looking glass.
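The remark that a particle inside the barrier would have negative kinetic energy, and hence no real velocity, can be made concrete with a short sketch. It assumes a massive particle (an electron, chosen only for illustration) and hypothetical energies of 1 eV for the particle and 2 eV for the barrier; none of these numbers comes from the experiment, which used photons.

```python
import cmath

ELECTRON_MASS_KG = 9.109e-31   # illustrative particle, not the photons of the experiment
JOULES_PER_EV = 1.602e-19


def classical_velocity(kinetic_energy_j, mass_kg):
    """Return v = sqrt(2 * KE / m), allowing a complex result when KE is negative."""
    return cmath.sqrt(2.0 * kinetic_energy_j / mass_kg)


# Hypothetical values: the particle carries less energy than the barrier demands.
total_energy_j = 1.0 * JOULES_PER_EV
barrier_height_j = 2.0 * JOULES_PER_EV

# Outside the barrier all of the energy is kinetic, so the velocity is real.
v_outside = classical_velocity(total_energy_j, ELECTRON_MASS_KG)

# Inside the barrier the kinetic energy is (total - barrier) < 0,
# so the square root is purely imaginary.
v_inside = classical_velocity(total_energy_j - barrier_height_j, ELECTRON_MASS_KG)

print("outside the barrier:", v_outside)
print("inside the barrier: ", v_inside)
```

The velocity inside the barrier comes out with zero real part, which is the sense in which no ordinary speed, and therefore no obvious traversal time, can be assigned to the tunneling particle.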

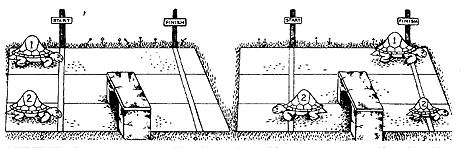

RACING TORTOISES help to characterise tunneling time. Each represents the probability distribution of the position of a photon. The peak is where a photon is most likely to be detected. The tortoises start together (left). Tortoise 2 encounters a barrier and splits in two (right). Because the chance of tunneling is low, the transmitted tortoise is small, whereas the reflected one is nearly as tall as the original. On those rare occasions of tunneling, the peak of tortoise 2's shell on average crosses the finish line first, implying an average tunneling velocity of 1.7 times the speed of light. But the tunneling tortoise's nose never travels faster than light: note that both tortoises remain "nose and nose" at the end. Hence, Einstein's law is not violated.

During several days of data collection, more than one million photons tunneled through the barrier, one by one. We compared the arrival times for tunneling photons and for photons that had been traveling unimpeded at the speed of light. (The speed of light is so great that conventional electronics are hundreds of thousands of times too slow to perform the timing; the technique we used will be described later, as a second example of quantum nonlocality.) The surprising result: on average, the tunneling photons arrived before those that traveled through air, implying an average tunneling velocity of about 1.7 times that of light. The result appears to contradict the classical notion of causality, because, according to Einstein's theory of relativity, no signal can travel faster than the speed of light. If signals could move faster, effects could precede causes from the viewpoints of certain observers. For example, a light bulb might begin to glow before the switch was thrown. The situation can be stated more precisely. If at some definite time you made a decision to start firing photons at a mirror by opening a starting gate, and someone else sat on the other side of the mirror looking for photons, how much time would elapse before the other person knew you had opened the gate? At first, it might seem that since the photon tunnels faster than light, she would see the light before a signal traveling at the theoretical speed limit could have reached her, in violation of the Einsteinian view of causality. Such a state of affairs seems to suggest an array of extraordinary, even bizarre communication technologies. Indeed, the implications of faster-than-light influences led some physicists in the early part of the century to propose alternatives to the standard interpretation of quantum mechanics. Is there a quantum mechanical way out of this paradox? Yes, there is, although it deprives us of the exciting possibility of toying with cause and effect.
Until now, we have been talking about the tunneling velocity of photons in a classical context, as if it were a directly observable quantity. The Heisenberg uncertainty principle, however, indicates that it is not. The time of emission of a photon is not precisely defined, so neither is its exact location or velocity. In truth, the position of a photon is more correctly described by a bell-shaped probability distribution (a tortoise shell) whose width corresponds to the uncertainty of its location. A relapse into metaphor might help to explain the point. The nose of each tortoise leaves the starting gate the instant of opening. The emergence of the nose marks the earliest time at which there is any possibility for observing a photon. No signal can ever be received before the nose arrives. But because of the uncertainty of the photon's location, on average a short delay exists before the photon crosses the gate. Most of the tortoise (where the photon is more likely to be detected) trails behind its nose. For simplicity, we label the probability distribution of the photon that travels unimpeded to the detector as "tortoise 1" and that of the photon that tunnels as "tortoise 2." When tortoise 2 reaches the tunnel barrier, it splits into two smaller tortoises: one that is reflected back toward the start and one that crosses the barrier. These two partial tortoises together represent the probability distribution of a single photon. When the photon is detected at one position, its other partial tortoise instantly disappears. The reflected tortoise is bigger than the tunneling tortoise simply because the chances of reflection are greater than those of transmission (recall that the mirror reflects a photon 99 percent of the time). We observe that the peak of tortoise 2's shell, representing the most likely position of the tunneling photon, reaches the finish line before the peak of tortoise 1's shell. But tortoise 2's nose arrives no earlier than the nose of tortoise 1.
Because the tortoises' noses travel at the speed of light, the photon that signals the opening of the starting gate can never arrive earlier than the time allowed by causality [see illustration]. In a typical experiment, however, the nose represents a region of such low probability that a photon is rarely observed there. The whereabouts of the photon, detected only once, are best predicted by the location of the peak. So even though the tortoises are nose and nose at the finish, the peak of tortoise 2's shell precedes that of tortoise 1's (the transmitted tortoise is smaller than tortoise 1). A photon tunneling through the barrier is therefore most likely to arrive before a photon traveling unimpeded at the speed of light. Our experiment confirmed this prediction. But we do not believe that any individual part of the wave packet moves faster than light. Rather, the wave packet gets "reshaped" as it travels, until the peak that emerges consists primarily of what was originally in front. At no point does the tunneling-photon wave packet travel faster than the free-traveling photon. In 1982 Steven Chu of Stanford University and Stephen Wong, then at AT&T Bell Laboratories, observed a similar reshaping effect. They experimented with laser pulses consisting of many photons and found that the few photons that made it through an obstacle arrived sooner than those that could move freely. One might suppose that only the first few photons of each pulse were "allowed" through and thus dismiss the reshaping effect. But this interpretation is not possible in our case, because we study one photon at a time. At the moment of detection, the entire photon "jumps" instantly into the transmitted portion of the wave packet, beating its twin to the finish more than half the time. Although reshaping seems to account for our observations, the question still lingers as to why reshaping should occur in the first place. No one yet has any physical explanation for the rapid tunneling.
In fact, the question had puzzled investigators as early as the 1930s, when physicists such as Eugene Wigner of Princeton University had noticed that quantum theory seemed to imply such high tunneling speeds. Some assumed that approximations used in that prediction must be incorrect, whereas others held that the theory was correct but required cautious interpretation. Some researchers, in particular Markus Büttiker and Rolf Landauer of the IBM Thomas J. Watson Research Center, suggest that quantities other than the arrival time of the wave packet's peak (for example, the angle through which a "spinning" particle rotates while tunneling) might be more appropriate for describing the time "spent" inside the barrier. Although quantum mechanics can predict a particle's average arrival time, it lacks the classical notion of trajectories, without which the meaning of time spent in a region is unclear. One hint to explain fast tunneling stems from a peculiar characteristic of the phenomenon. According to theory, an increase in the width of the barrier does not lengthen the time needed by the wave packet to tunnel through. This observation can be roughly understood using the uncertainty principle. Specifically, the less time we spend studying a photon, the less certain we can be of its energy. Even if a photon fired at a barrier does not have enough energy to cross it, in some sense a short period initially exists during which the particle's energy is uncertain. During this time, it is as though the photon could temporarily borrow enough extra energy to make it across the barrier. The length of this grace period depends only on the borrowed energy, not on the width of the barrier. No matter how wide the barrier becomes, the transit time across it remains the same. For a sufficiently wide barrier, the apparent traversal speed would exceed the speed of light.
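The claim that a width-independent transit time eventually implies an apparent speed above that of light is simple arithmetic, sketched here. The one-micron barrier and the factor of 1.7 are taken from the text; combining them to fix a single transit time, and treating that time as exactly constant for the other (hypothetical) widths, are simplifying assumptions made only for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def apparent_speed(width_m, transit_time_s):
    """Barrier width divided by the time taken to cross it."""
    return width_m / transit_time_s


# Figures quoted in the text: a one-micron coating and about 1.7 times light speed.
barrier_width_m = 1.0e-6
speed_ratio = 1.7

# Assumption: the 1.7 figure applies to the one-micron barrier, which fixes the transit time.
transit_time_s = barrier_width_m / (speed_ratio * SPEED_OF_LIGHT_M_PER_S)
time_in_air_s = barrier_width_m / SPEED_OF_LIGHT_M_PER_S
head_start_s = time_in_air_s - transit_time_s

print(f"transit time through barrier: {transit_time_s * 1e15:.2f} fs")
print(f"time over same distance in air: {time_in_air_s * 1e15:.2f} fs")
print(f"head start of the tunneling peak: {head_start_s * 1e15:.2f} fs")

# Hypothetical widths, holding the transit time fixed as the theory described above suggests.
for width_m in (0.5e-6, 1.0e-6, 2.0e-6, 4.0e-6):
    ratio = apparent_speed(width_m, transit_time_s) / SPEED_OF_LIGHT_M_PER_S
    print(f"width {width_m * 1e6:.1f} micron -> apparent speed {ratio:.2f} c")
```

Under these assumptions the head start is of the order of a femtosecond, which gives a sense of why ordinary electronics could not time the race.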

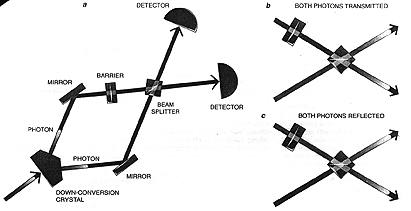

Obviously, for our measurements to be meaningful, our tortoises had to run exactly the same distance. In essence, we had to straighten the racetrack so that neither tortoise had the advantage of the inside lane. Then when we placed a barrier in one path, any delay or acceleration would be attributed solely to quantum tunneling. One way to set up two equal lanes would be to determine how much time it takes for a photon to travel from the source to the detector for each path. Once the times were equal, we would know the paths were also equal. But performing such a measurement with a conventional stopwatch would require one whose hands went around nearly a billion billion times per minute. Fortunately, Leonard Mandel and his co-workers at the University of Rochester have developed an interference technique that can time our photons. Mandel's quantum stopwatch relies on an optical element called a beam splitter [see illustration]. Such a device transmits half the photons striking it and reflects the other half. The racetrack is set up so that two photon wave packets are released at the same time from the starting gate and approach the beam splitter from opposite sides. For each pair of photons, there are four possibilities: both photons might pass through the beam splitter; both might rebound from the beam splitter; both could go off together to one side; or both could go off together to the other side. The first two possibilities (that both photons are transmitted or both reflected) result in what are termed coincidence detections. Each photon reaches a different detector (placed on either side of the beam splitter), and both detectors are triggered within a billionth of a second of each other. Unfortunately, this time resolution is about how long the photons take to run the entire race and hence is much too coarse to be useful. So how do the beam splitter and the detectors help in the setup of the racetrack?
We simply tinker with the length of one of the paths until all coincidence detections disappear. By doing so, we make the photons reach the beam splitter at the same time, effectively rendering the two racing lanes equal. Admittedly, the proposition sounds peculiar; after all, equal path lengths would seem to imply coincident arrivals at the two detectors. Why would the absence of such events be the desired signal? The reason lies in the way quantum mechanical particles interact with one another. All particles in nature are either bosons or fermions. Identical fermions (electrons, for example) obey the Pauli exclusion principle, which prevents any two of them from ever being in the same place at the same time. In contrast, bosons (such as photons) like being together. Thus after reaching the beam splitter at the same time, the two photons prefer to head in the same direction. This preference leads to the detection of fewer coincidences (none, in an ideal experiment) than would be the case if the photons acted independently or arrived at the beam splitter at different times. Therefore, to make sure the photons are in a fair race, we adjust one of the path lengths. As we do this, the rate of coincident detections goes through a dip whose minimum occurs when the photons take exactly the same amount of time to reach the beam splitter. The width of the dip (which is the limiting factor in the resolution of our experiments) corresponds to the size of the photon wave packets: typically, about the distance light moves in a few hundredths of a trillionth of a second. Only when we knew that the two path lengths were equal did we install the barrier and begin the race. We then found that the coincidence rates were no longer at a minimum, implying that one of the photons was reaching the beam splitter first. To restore the minimum, we had to lengthen the path taken by the tunneling photon.
This correction indicates that photons take less time to cross a barrier than to travel in air.
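The dip in coincidences can be illustrated with a minimal sketch of an idealized 50/50 beam splitter. It assumes the common textbook convention in which the reflected amplitude carries a factor of i relative to the transmitted one, and it assumes Gaussian wave packets with a hypothetical width of 20 femtoseconds; the function names are made up for this example and the model is not the analysis used in the experiment.

```python
import math

# Idealized 50/50 beam splitter amplitudes (assumed convention: reflection picks up a factor i).
TRANSMIT = 1 / math.sqrt(2)
REFLECT = 1j / math.sqrt(2)


def hom_coincidence_probability(overlap):
    """Chance that both detectors fire, given the overlap (0 to 1) of the two wave packets.

    The overlapping part of the packets is indistinguishable, so the
    'both transmitted' and 'both reflected' amplitudes are added before squaring.
    The non-overlapping part behaves like independent particles.
    """
    amp_both_transmitted = TRANSMIT * TRANSMIT
    amp_both_reflected = REFLECT * REFLECT
    interfering = abs(amp_both_transmitted + amp_both_reflected) ** 2
    independent = abs(amp_both_transmitted) ** 2 + abs(amp_both_reflected) ** 2
    return overlap * interfering + (1.0 - overlap) * independent


def gaussian_overlap(delay_s, packet_width_s):
    """Assumed Gaussian model of how the overlap falls off with arrival-time difference."""
    return math.exp(-((delay_s / packet_width_s) ** 2))


PACKET_WIDTH_S = 20e-15  # hypothetical: "a few hundredths of a trillionth of a second"

for delay_fs in (-60, -40, -20, -10, 0, 10, 20, 40, 60):
    overlap = gaussian_overlap(delay_fs * 1e-15, PACKET_WIDTH_S)
    print(f"delay {delay_fs:+4d} fs -> coincidence probability "
          f"{hom_coincidence_probability(overlap):.3f}")
```

In this model the two coincidence amplitudes are equal and opposite, so they cancel at zero delay and the probability climbs back toward one half as the packets stop overlapping. Shifting the delay until the minimum is restored is the operation described above for timing the tunneling photon.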

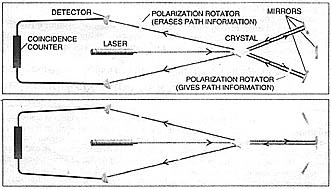

TWIN-PHOTON INTERFEROMETER (a) precisely times racing photons. The photons are born in a down-conversion crystal and are directed by mirrors to a beam splitter. If one photon beats the other to the beam splitter (because of the barrier), both detectors will be triggered in about half the races. Two possibilities lead to such coincidence detections: both photons are transmitted by the beam splitter (b), or both are reflected (c). Aside from their arrival times, there is no way of determining which photon took which route; either could have traversed the barrier. (This nonlocality actually sustains the performance of the interferometer.) If both photons reach the beam splitter simultaneously, for quantum reasons they will head in the same direction, so that both detectors do not go off. The two possibilities shown are then said to interfere destructively.

Even though investigators designed racetracks for photons and a clever timekeeping device for the race, the competition still should have been difficult to conduct. The fact that the test could be carried out at all constitutes a second validation of the principle of nonlocality, without which precise timing of the race would have been impossible. To determine the emission time of a photon most precisely, one would obviously like the photon wave packets to be as short as possible. The uncertainty principle, however, states that the more accurately one determines the emission time of a photon, the more uncertainty one has to accept in knowing its energy, or color. Because of the uncertainty principle, a fundamental trade-off should emerge in our experiments. The colors that make up a photon will disperse in any kind of glass, widening the wave packet and reducing the precision of the timing. Dispersion arises from the fact that different colors travel at various speeds in glass: blue light generally moves more slowly than red. A familiar example of dispersion is the splitting of white light into its constituent colors by a prism. As a short pulse of light travels through a dispersive medium (the barrier itself or one of the glass elements used to steer the light), it spreads out into a "chirped" pulse: the redder part pulls ahead, and the bluer hues lag behind [see illustration]. A simple calculation shows that the width of our photon pulses would quadruple on passage through an inch of glass. The presence of such broadening should have made it well nigh impossible to tell which tortoise crossed the finish line first. Remarkably, the widening of the photon pulse did not degrade the precision of our timing. Herein lies our second example of quantum nonlocality. Essentially, both twin photons must be traveling both paths simultaneously. Almost magically, potential timing errors cancel out as a result.
To understand this cancellation effect, we need to examine a special property of our photon pairs. The pairs are born in what physicists call "spontaneous parametric down-conversion." The process occurs when a photon travels into a crystal that has nonlinear optical properties. Such a crystal can absorb a single photon and emit a pair of other photons, each with about half the energy of the parent, in its place (this is the meaning of the phrase "down-conversion"). An ultraviolet photon, for instance, would produce two infrared ones. The two photons are emitted simultaneously, and the sum of their energies exactly equals the energy of the parent photon. In other words, the colors of the photon pairs are correlated: if one is slightly bluer (and thus travels more slowly in glass), then the other must be slightly redder (and must travel more quickly). One might think that differences between siblings might affect the outcome of the race: one tortoise might be more athletic than the other. Yet because of nonlocality, any discrepancy between the pair proves irrelevant. The key point is that neither detector has any way of identifying which of the photons took which path. Either photon might have passed through the barrier. Having two or more coexisting possibilities that lead to the same final outcome results in what is termed an interference effect. Here each photon takes both paths simultaneously, and these two possibilities interfere with each other. That is, the possibility that the photon that went through the glass was the redder (faster) one interferes with the possibility that it was the bluer (slower) one. As a result, the speed differences balance, and the effects of dispersion cancel out. The dispersive widening of the individual photon pulses is no longer a factor. If nature acted locally, we would have been hard-pressed to conduct any measurements.
The only way to describe what happens is to say that each twin travels through both the path with the barrier and the free path, a situation that exemplifies nonlocality.
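One simplified way to see why the pulse-widening term drops out is sketched below. It assumes the phase that glass adds to a photon can be expanded to second order in the photon's frequency offset from the center color: a first-order term (an overall delay) and a second-order term (the spreading). Because the twins' energies sum to a fixed value, their offsets are equal and opposite, and the two interfering possibilities differ only by which twin went through the glass. The coefficients are hypothetical illustrative values, not measured properties of any particular glass.

```python
import math


def glass_phase(offset_rad_per_s, delay_coeff_s, spread_coeff_s2):
    """Phase added by the glass, expanded to second order in the frequency offset.

    delay_coeff_s   : first-order term, shifts the whole packet in time
    spread_coeff_s2 : second-order term, responsible for the pulse spreading
    """
    return (delay_coeff_s * offset_rad_per_s
            + 0.5 * spread_coeff_s2 * offset_rad_per_s ** 2)


def interfering_phase_difference(offset_rad_per_s, delay_coeff_s, spread_coeff_s2):
    """Phase difference between 'bluer twin in glass' and 'redder twin in glass'.

    Energy conservation makes the twins' offsets equal and opposite.
    """
    bluer = glass_phase(+offset_rad_per_s, delay_coeff_s, spread_coeff_s2)
    redder = glass_phase(-offset_rad_per_s, delay_coeff_s, spread_coeff_s2)
    return bluer - redder


# Hypothetical illustrative values.
OFFSET = 1.0e13          # rad/s away from the center frequency
DELAY_COEFF = 1.27e-10   # s
for spread_coeff in (0.0, 9.0e-28, 9.0e-27):  # s^2, from none to strong spreading
    diff = interfering_phase_difference(OFFSET, DELAY_COEFF, spread_coeff)
    print(f"spread coefficient {spread_coeff:.1e} s^2 -> phase difference {diff:.6f} rad")

# The result stays at 2 * DELAY_COEFF * OFFSET regardless of the spreading term.
print("expected:", 2 * DELAY_COEFF * OFFSET)
```

In this toy model the spreading term is even in the offset and cancels in the difference, while the overall delay (the quantity the race is meant to measure) survives.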

Thus far we have discussed two nonlocal results from our quantum experiments. The first is the measurement of tunneling time, which requires two photons to start a race at exactly the same time. The second is the dispersion cancellation effect, which relies on a precise correlation of the racing photons' energies. In other words, the photons are said to be correlated in energy (what they do) and time (when they do it). Our final example of nonlocality is effectively a combination of the first two. Specifically, one photon "reacts" to what its twin does instantaneously, no matter how far apart they are. Knowledgeable readers may protest at this point, claiming that the Heisenberg uncertainty principle forbids precise specification of both time and energy. And they would be right, for a single particle. For two particles, however, quantum mechanics allows us to define simultaneously the difference between their emission times and the sum of their energies, even though neither particle's time or energy is specified. This fact led Einstein, Boris Podolsky and Nathan Rosen to conclude that quantum mechanics is an incomplete theory. In 1935 they formulated a thought experiment to demonstrate what they believed to be the shortcomings of quantum mechanics. If one believes quantum mechanics, the dissenting physicists pointed out, then any two particles produced by a process such as down-conversion are coupled. For example, suppose we measure the time of emission of one particle. Because of the tight time correlation between them, we could predict with certainty the emission time of the other particle, without ever disturbing it. We could also measure directly the energy of the second particle and then infer the energy of the first particle. Somehow we would have managed to determine precisely both the energy and the time of each particle, in effect beating the uncertainty principle. How can we understand the correlations and resolve this paradox?
There are basically two options. The first is that there exists what Einstein called "spooklike actions at a distance" (spukhafte Fernwirkungen). In this scenario, the quantum mechanical description of particles is the whole story. No particular time or energy is associated with any photon until, for example, an energy measurement is made. At that point, only one energy is observed. Because the energies of the two photons sum to the definite energy of the parent photon, the previously undetermined energy of the twin photon, which we did not measure, must instantaneously jump to the value demanded by conservation. This nonlocal "collapse" would occur no matter how far away the second photon had traveled. The uncertainty principle is not violated, because we can specify only one variable or the other: the energy measurement disrupts the system, instantaneously introducing a new uncertainty in the time. Of course, such a crazy, nonlocal model should not be accepted if a simpler way exists to understand the correlations. A more intuitive explanation is that the twin photons leave the source at definite, correlated times, carrying definite, correlated energies. The fact that quantum mechanics cannot specify these properties simultaneously would merely indicate that the theory is incomplete. Einstein, Podolsky and Rosen advocated the latter explanation. To them, there was nothing at all nonlocal in the observed correlations between particle pairs, because the properties of each particle are determined at the moment of emission. Quantum mechanics was only correct as a probabilistic theory, a kind of photon sociology, and could not completely describe all individual particles. One might imagine that there exists an underlying theory that could predict the specific results of all possible measurements and show that particles act locally. Such a theory would be based on some hidden variable yet to be discovered. In 1964 John S. Bell of CERN, the European laboratory for particle physics near Geneva, established a theorem showing that all invocations of local, hidden variables give predictions different from those stated by quantum mechanics.

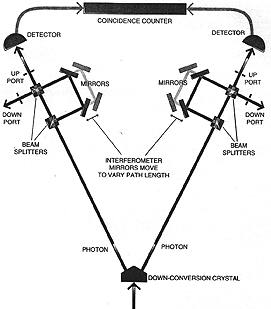

Since then, experimental results have supported the nonlocal (quantum mechanical) picture and contradicted the intuitive one of Einstein, Podolsky and Rosen. Much of the credit for the pioneering work belongs to the groups led by John Clauser of the University of California at Berkeley and Alain Aspect, now at the Institute of Optics in Orsay. In the 1970s and early 1980s they examined the correlations between polarizations in photons. The more recent work of John G. Rarity and Paul R. Tapster of the Royal Signals and Radar Establishment in England explored correlations between the momenta of twin photons. Our group has taken the tests one step further. Following an idea proposed by James D. Franson of Johns Hopkins University in 1989, we have performed an experiment to determine whether some local hidden variable model, rather than quantum mechanics, can account for the energy and time correlations. In our experiment, photon twins from our down-conversion crystal are separately sent to identical interferometers [see illustration]. Each interferometer is designed much like an interstate highway with an optional detour. A photon can take a short path, going directly from its source to its destination. Or it can take the longer, detour path (whose length we can adjust) by detouring through the rest station before continuing on its way. Now watch what happens when we send the members of a pair of photons through these interferometers. Each photon will randomly choose the long route (through the detour) or the shorter route. After following one of the two paths, a photon can leave its interferometer through either of two ports, one labeled "up" and the other "down." We observed that each particle was as likely to leave through the up port as it was through the down. Thus, one might intuitively presume that the photon's choice of one exit would be unrelated to the exit choice its twin makes in the other interferometer. Wrong.
Instead we see strong correlations between which way each photon goes when it leaves its interferometer. For certain detour lengths, for example, whenever the photon on the left leaves at the up exit, its twin on the right flies through its own up exit. One might suspect that this correlation is built in from the start, as when one hides a white pawn in one fist and a black pawn in the other. Because their colors are well defined at the outset, we are not surprised that the instant we find a white pawn in one hand, we know with certainty that the other must be black. But a built-in correlation cannot account for the actual case in our experiment, which is much stranger: by changing the path length in either interferometer, we can control the nature of the correlations. We can go smoothly from a situation where the photons always exit the corresponding ports (both use the up port, or both use the down port) of their respective interferometers to one in which they always exit at opposite ports. In principle, such a correlation would exist even if we adjusted the path length after the photons had left the source. In other words, before entering the interferometer, neither photon knows which way it is going to have to go, but on leaving, each one knows instantly (nonlocally) what its twin has done and behaves accordingly. To analyze these correlations, we look at how often the photons emerge from each interferometer at the same time and yield a coincidence count between detectors placed at the up exit ports of the two interferometers. Varying either of the long-arm path lengths does not change the rate of detections at either detector individually. It does, however, affect the rate of coincidence counts, indicating the correlated behavior of each photon pair. This variation produces "fringes" reminiscent of the light and dark stripes in the traditional two-slit interferometer showing the wave nature of particles.

In our experiment, the fringes imply a peculiar interference effect. As alluded to earlier, interference can be expressed as the result of two or more indistinguishable, coexisting possibilities leading to the same final outcome (recall our second example of nonlocality, in which each photon travels along two different paths simultaneously, producing interference). In the present case, there are two possible ways for a coincidence count to occur: either both photons had to travel the short paths, or both photons had to travel the long paths. (In the cases in which one photon travels a short path and the other a long path, they arrive at different times and so do not interfere with each other; we discard these counts electronically.)
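The behavior described above is often summarized in an idealized textbook form, sketched here; it is not the authors' own notation. The symbols are assumptions introduced for illustration: \phi_L and \phi_R are the phases set by the two long-arm path lengths, R_0 is an overall rate, and V is the fringe contrast.

```latex
R_{\text{single}} = \text{constant}, \qquad
R_{\text{coinc}}(\phi_L, \phi_R) = R_0 \left[ 1 + V \cos(\phi_L + \phi_R) \right]
```

In this form each detector's individual rate stays flat, while the coincidence rate oscillates with the sum of the two phases, so adjusting either long arm shifts the fringes.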

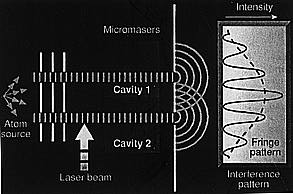

NONLOCAL CORRELATION between two particles is demonstrated in the Franson experiment, which sends two photons to separate but identical interferometers. Each photon may take a short route or a longer 'detour' at the first beam splitter. They may leave through the upper or lower exit ports. A detector looks at the photons leaving the upper exit ports. Before entering its interferometer, neither photon knows which way it will go. After leaving, each knows instantly and nonlocally what its twin has done and so behaves accordingly.


The coexistence of these two possibilities suggests a classically nonsensical picture. Because each photon arrives at the detector at the same time after having traveled both the long and short routes, each photon was emitted 'twice': once for the short path and once for the long path. To see this, consider the analogy in which you play the role of one of the detectors. You receive a letter from a friend on another continent. You know the letter arrived via either an airplane or a boat, implying that it was mailed a week ago (by plane) or a month ago (by boat). For an interference effect to exist, the one letter had to have been mailed at both times. Classically, of course, this possibility is absurd. But in our experiments the observation of interference fringes implies that each of the twin photons possessed two indistinguishable times of emission from the crystal. Each photon has two birthdays. More important, the exact form of the interference fringes can be used to differentiate between quantum mechanics and any conceivable local hidden variable theory (in which, for example, each photon might be born with a definite energy or already knowing which exit port to take). According to the constraints derived by Bell, no hidden variable theory can predict sinusoidal fringes that exhibit a 'contrast' of greater than 71 percent; that is, the difference in intensity between light and dark stripes has a specific limit. Our data, however, display fringes that have a contrast of about 90 percent. If certain reasonable supplementary assumptions are made, one can conclude from these data that the intuitive, local, realistic picture suggested by Einstein and his cohorts is wrong: it is impossible to explain the observed results without acknowledging that the outcome of a measurement on the one side depends nonlocally on the result of a measurement on the other side.
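The 71 percent limit corresponds to 1/sqrt(2), roughly 0.707. As a rough sketch of the comparison described above, the snippet below computes a fringe contrast from a maximum and a minimum coincidence rate and checks it against that bound. It assumes that 'contrast' means the usual fringe visibility, (max - min) / (max + min). The function names and the sample rates are hypothetical, chosen only to reproduce the roughly 90 percent contrast quoted in the text; they are not the experiment's data.

```python
import math

# Bound quoted in the text: local hidden variable models cannot produce
# sinusoidal fringes with contrast above 1/sqrt(2), about 71 percent.
BELL_CONTRAST_LIMIT = 1 / math.sqrt(2)


def fringe_contrast(rate_max, rate_min):
    """Fringe visibility: (max - min) / (max + min). Assumed definition."""
    return (rate_max - rate_min) / (rate_max + rate_min)


def exceeds_local_limit(rate_max, rate_min):
    """True if the fringe contrast is above the local-realistic bound."""
    return fringe_contrast(rate_max, rate_min) > BELL_CONTRAST_LIMIT


# Hypothetical coincidence rates (counts per second), for illustration only.
print(fringe_contrast(95.0, 5.0))       # 0.9
print(exceeds_local_limit(95.0, 5.0))   # True
```

A contrast of 0.6, by contrast, would sit below the bound and could not rule out a local hidden variable model.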

So is Einstein's theory of relativity in danger? Astonishingly, no, because there is no way to use the correlations between particles to send a signal faster than light. The reason is that whether each photon reaches its detector or instead uses the down exit port is a random result. Only by comparing the apparently random records of counts at the two detectors, necessarily bringing our data together, can we notice the nonlocal correlations. The principles of causality remain inviolate.

Science-fiction buffs may be saddened to learn that faster-than-light communication still seems impossible. But several scientists have tried to make the best of the situation. They propose to use the randomness of the correlations for various cipher schemes. Codes produced by such quantum cryptography systems would be absolutely unbreakable [see "Quantum Cryptography," by Charles H. Bennett, Gilles Brassard and Artur K. Ekert; Scientific American, October 1992]. We have thus seen nonlocality in three different instances. First, in the process of tunneling, a photon is able to somehow sense the far side of a barrier and cross it in the same amount of time no matter how thick the barrier may be. Second, in the high-resolution timing experiments, the cancellation of dispersion depends on each of the two photons having traveled both paths in the interferometer. Finally, in the last experiment discussed, a nonlocal correlation of the energy and time between two photons is evidenced by the photons' coupled behavior after leaving the interferometers. Although in our experiments the photons were separated by only a few feet, quantum mechanics predicts that the correlations would have been observed no matter how far apart the two interferometers were. Somehow nature has been clever enough to avoid any contradiction with the notion of causality. For in no way is it possible to use any of the above effects to send signals faster than the speed of light. The tenuous coexistence of relativity, which is local, and quantum mechanics, which is nonlocal, has weathered yet another storm.

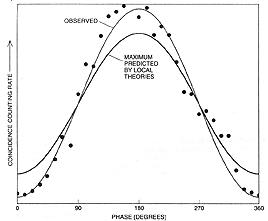

RATE OF COINCIDENCES between left and right detectors in the

Franson experiment (red dots,

with best-fit line) strongly suggests nonlocality. The horizontal axis represents

the sum of the two

long path lengths, in angular units known as phases. The 'contrast,' or

the degree of variation in these rates,

exceeds the maximum allowed by local, realistic theories (blue line), implying

that the correlations

must be nonlocal, as shown by John S. Bell of CERN.

Quantum Seeing in the Dark

Paul Kwiat, Harald Weinfurter, Anton Zeilinger Scientific American Nov 96

In Greek mythology, the hero Perseus is faced with the unenviable task of fighting the dreaded Medusa. The snake-haired beast is so hideous that a mere glimpse of her immediately turns any unlucky observer to stone. In one version of the story, Perseus avoids this fate by cleverly using his shield to reflect Medusa's image back to the creature herself, turning her to stone. But what if Perseus did not have well-polished armor? He presumably would have been doomed. If he closed his eyes, he would have been unable to find his target. And the smallest peek would have allowed some bit of light striking Medusa to reflect into his eye; having thus "seen" the monster, he would have been finished. In the world of physics, this predicament might be summed up by a seemingly innocuous, almost obvious claim made in 1962 by Nobelist Dennis Gabor, who invented holography. Gabor asserted, in essence, that no observation can be made with less than one photon (the basic particle, or quantum, of light) striking the observed object. In the past several years, however, physicists in the increasingly bizarre field of quantum optics have learned that not only is this claim far from obvious, it is, in fact, incorrect. For we now know how to determine the presence of an object with essentially no photons having touched it. Such interaction-free measurement seems to be a contradiction: if there is no interaction, how can there be a measurement? That is a reasonable conundrum in classical mechanics, the field of physics describing the motions of footballs, planets and other objects that are not too small. But quantum mechanics, the science of electrons, photons and other particles in the atomic realm, says otherwise. Interaction-free measurements can indeed be achieved by quantum mechanics and clever experimental designs. If Perseus had been armed with a knowledge of quantum physics, he could have devised a way to 'see' Medusa without any light actually striking the Gorgon and entering his eye.
He could have looked without looking. Such quantum prestidigitation offers many ideas for building detection devices that could have use in the real world. Perhaps even more interesting are the mind-boggling philosophical implications. Those applications and implications are best understood at the level of thought experiments: streamlined analyses that contain all the essential features of real experiments but without the practical complications. So, as a thought experiment, consider a variation of a shell game, which employs two shells and a pebble hidden under one of them. The pebble, however, is special: it will turn to dust if exposed to any light. The player attempts to determine where the hidden pebble is but without exposing it to light or disturbing it in any way. If the pebble turns to dust, the player loses the game. Initially, this task may seem impossible, but we quickly see that as long as the player is willing to be successful half the time, then an easy strategy is to lift the shell he hopes does not contain the pebble. If he is right, then he knows the pebble lies under the other shell, even though he has not seen it. Winning with this strategy, of course, amounts to nothing more than a lucky guess. Next, we take our modification one step further, seemingly simplifying the game but in actuality making it impossible for a player limited to the realm of classical physics to win. We have only one shell, and a random chance that a pebble may or may not be under it. The player's goal is to say if a pebble is present, again without exposing it to light. Assume there is a pebble under the shell. If the player does not look under the shell, then he gains no information. If he looks, then he knows the pebble was there, except that he has necessarily exposed it to light and so finds only a pile of dust. The player may try to dim the light so there is very little chance of it hitting the pebble.
For the player to see the pebble, however, at least one photon must have hit it, by definition implying he has lost.

To make the game more dramatic, Avshalom C. Elitzur and Lev Vaidman, two physicists at Tel Aviv University, considered the pebble to be a "superbomb" that would explode if just a single photon hit it. The problem then became: determine if a pebble bomb sits under a shell, but don't set it off. Elitzur and Vaidman were the first researchers to offer any solution to the problem. Their answer works, at best, half the time. Nevertheless, it was essential for demonstrating any hope at all of winning the game. Their method exploits the fundamental nature of light. We have already mentioned that light consists of photons, calling to mind a particlelike quality. But light can display distinctly wavelike characteristics, notably a phenomenon called interference. Interference is the way two waves add up with each other. For example, in the well-known double-slit experiment, light is directed through two slits, one above the other, to a faraway screen. The screen then displays bright and dark fringes. The bright fringes correspond to places where the crests and troughs of the light waves from one slit add constructively to the crests and troughs of waves from the other slit. The dark bands correspond to destructive interference, where the crests from one slit cancel the troughs from the other. Another way of expressing this concept is to say that the bright fringes correspond to areas on the screen that have a high probability of photon hits, and the dark fringes to a low probability of hits. According to the rules of quantum mechanics, interference occurs whenever there is more than one possible way for a given outcome to happen, and the ways are not distinguishable by any means (this is a more general definition of interference than is often given in textbooks). In the double-slit experiment, light can reach the screen in two possible ways (from the upper or the lower slit), and no effort is made to determine which photons pass through which slit.
If we somehow could determine which slit a photon passed through, there would be no interference, and the photon could end up anywhere on the screen. As a result, no fringe pattern would emerge. Simply put, without two indistinguishable paths, interference cannot occur. As the initial setup for their hypothetical measuring system, Elitzur and Vaidman start with an interferometer, a device consisting of two mirrors and two beam splitters. Light entering the interferometer hits a beam splitter, which sends the light along two optical paths: an upper and a lower one. The paths recombine at the second beam splitter, which sends the light to one of two photon detectors [see illustration at left]. Thus, the interferometer gives each photon two possible paths between the light source and a detector. If the lengths of both paths through the interferometer are adjusted to be exactly equal, the setup effectively becomes the double-slit experiment. The main difference is that the photon detectors take the place of the screen that shows bright and dark fringes. One detector is positioned so that it will detect only the equivalent of the bright fringes of an interference pattern (call that detector D-light). The other one records the dark fringes; in other words, no photon ever reaches it (call that detector D-dark).

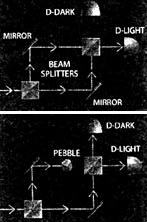

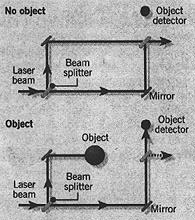

ELITZUR-VAIDMAN EXPERIMENT gives a photon a choice of

two paths to follow. The optical elements are arranged (top) so that photons

always go to detector D-light (corresponding to constructive interference)

but never to D-dark (corresponding to destructive interference). The presence

of a pebble in one path, however, occasionally sends a photon to D-dark

(bottom), indicating that an interaction-free measurement has occurred.


DEMONSTRATION of the Elitzur-Vaidman scheme uses light from a down-conversion crystal, which enters a beam splitter, bounces off two mirrors and interferes with itself back at the beam splitter (top). No light reaches D-dark (corresponding to destructive interference; constructive interference is in the direction from which the photon first came). If a mirror 'pebble' is inserted into a light path, no interference occurs at the beam splitter; D-dark sometimes receives photons (bottom).

Pebble in the Path

What happens if a pebble is placed into one of the paths, say, the upper one? Assuming that the first beam splitter acts randomly, then with 50 percent likelihood, the photon takes the upper path, hits the pebble (or explodes the superbomb) and never gets to the second beam splitter. If the photon takes the lower path, it does not hit the pebble. Moreover, interference no longer occurs at the second beam splitter, for the photon has only one way to reach it. Therefore, the photon makes another random choice at the second beam splitter. It may be reflected and hit detector D-light; this outcome gives no information, because it would have happened anyway if the pebble had not been there. But the photon may also go to detector D-dark. If that occurs, we know with certainty that there was an object in one path of the interferometer, for if there were not, detector D-dark could not have fired. And because we sent only a single photon, and it showed up at D-dark, it could not have touched the pebble. Somehow we have managed to make an interaction-free measurement: we have determined the presence of the pebble without interacting with it. Although the scheme works only some of the time, we emphasize here that when the scheme works, it works completely. The underlying quantum-mechanical magic in this feat is that everything, including light, has a dual nature, both particle and wave. When the interferometer is empty, the light behaves as a wave. It can reach the detectors along both paths simultaneously, which leads to interference. When the pebble is in place, the light behaves as an indivisible particle and follows only one of the paths. The mere presence of the pebble removes the possibility of interference, even though the photon need not have interacted with it.
To demonstrate Elitzur and Vaidman's idea, we and Thomas Herzog, now at the University of Geneva, performed a real version of their thought experiment two years ago and thus demonstrated that interaction-free devices can be built. The source of single photons was a special nonlinear optical crystal. When ultraviolet photons from a laser were directed through the crystal, sometimes they were "down-converted" into two daughter photons of lower energy that traveled off at about 30 degrees from each other. By detecting one of these photons, we were absolutely certain of the existence of its sister, which we then directed into our experiment. That photon went into an interferometer (for simplicity, we used a slightly different type of interferometer than the one Elitzur and Vaidman proposed). The mirrors and beam splitter were aligned so that nearly all the photons left by the same way they came in (the analogue of going to detector D-light in the Elitzur-Vaidman example or, in the double-slit experiment, of going to a bright fringe). In the absence of the pebble, the chance of a photon going to detector D-dark was very small because of destructive interference (the analogue of the dark fringes in the double-slit experiment) [see illustration]. But introducing a pebble into one of the pathways changed the odds. The pebble was a small mirror that directed the light path to another detector (D-pebble). We then found that about half of the time, D-pebble registered the photon, whereas about one fourth of the time D-dark did (the rest of the time the photon left the interferometer the same way it came in, giving no information). The firing of D-dark was the interaction-free detection of the pebble. In a simple extension of the scheme, we reduced the reflectivity of the beam splitter, which lessened the chance that the photons would be reflected onto the path containing the mirror to D-pebble.
What we found, in agreement with theoretical prediction, was that the probabilities of the photons going to D-pebble and going to D-dark became more and more equal. That is, by using a barely reflective beam splitter, up to half the measurements in the Elitzur-Vaidman scheme can be made interaction-free (instances in which the photons leave the interferometer the same way they came in are not counted as measurements).
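The odds quoted above can be reproduced with a small probability model. This is a minimal sketch under stated assumptions, not the authors' analysis: it assumes a lossless beam splitter with reflectivity R, a pebble mirror in the reflected arm, and that a photon returning from the open arm reaches D-dark when it is reflected and goes back toward the source when it is transmitted. The function names are hypothetical.

```python
def outcome_probabilities(reflectivity):
    """Return (P_pebble, P_dark, P_back) for one photon with the pebble in place.

    Assumes a lossless beam splitter and a pebble blocking the reflected arm.
    """
    r = reflectivity
    t = 1.0 - r
    p_pebble = r       # reflected into the blocked arm, registered by D-pebble
    p_dark = t * r     # transmitted on the way in, reflected on the way back
    p_back = t * t     # transmitted both times, leaves the way it came in
    return p_pebble, p_dark, p_back


def interaction_free_fraction(reflectivity):
    """Share of informative outcomes (D-pebble or D-dark) that are D-dark."""
    p_pebble, p_dark, _ = outcome_probabilities(reflectivity)
    return p_dark / (p_dark + p_pebble)


print(outcome_probabilities(0.5))   # (0.5, 0.25, 0.25)
for r in (0.5, 0.1, 0.01):
    print(r, interaction_free_fraction(r))
```

With R = 0.5 the model gives the one-half and one-fourth figures reported in the text. In this model the interaction-free fraction is (1 - R) / (2 - R), which rises toward one half as R shrinks but never reaches it.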

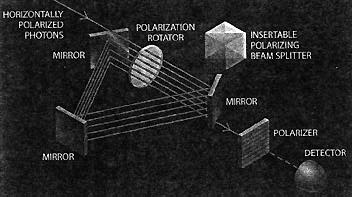

QUANTUM ZENO EFFECT can be demonstrated with devices that

rotate polarization 15 degrees.

After passing through six such rotators, the photon changes from a horizontal

polarization to a

vertical one and so is absorbed by the polarizer (top row). Interspersing

a polarizer after each rotator,

however, keeps the polarization from turning (bottom row).

The Quantum Zeno Effect

The question immediately arose: Is 50 percent the best we can do? Considerable, often heated, argument ensued among us, for no design change that would improve the odds was evident. In January 1994, however, Mark A. Kasevich of Stanford University came to visit us at Innsbruck for a month, and during this stay he put us on to a solution that, if realized, makes it possible to detect objects in an interaction-free way almost every time. It was not the first instance, and hopefully not the last, in which quantum optimism triumphed over quantum pessimism. The new technique is more or less an application of another strange quantum phenomenon, first discussed in detail in 1977 by Baidyanath Misra, now at the University of Brussels, and E. C. George Sudarshan of the University of Texas at Austin. Basically, a quantum system can be trapped in its initial state, even though it would evolve to some other state if left on its own. The possibility arises because of the unusual effect that measurements can have on quantum systems. The phenomenon is called the quantum Zeno effect, because it resembles the famous paradox raised by the Greek philosopher Zeno, who denied the possibility of motion to an arrow in flight because it appears "frozen" at each instant of its flight. It is also known as the watched-pot effect, a reference to the aphorism about boiling water. We all know that the mere act of watching the pot should not (and does not) have any effect on the time it takes to boil the water. In quantum mechanics, however, such an effect actually exists: the measurement affects the outcome (the principle is called the projection postulate). Kasevich essentially reinvented the simplest example of this effect, which was first devised in 1980 by Asher Peres of the Technion-Israel Institute of Technology. The example exploits yet another characteristic of light: polarization.
Polarization is the direction in which light waves oscillate: up and down for vertically polarized light, side to side for horizontally polarized light. These oscillations are at right angles to the light's direction of propagation. Light from the sun and other typical sources generally vibrates in all directions, but here we are concerned mostly with vertical and horizontal polarizations. Consider a photon directed through a series of, say, six devices that each slightly rotates the polarization of light so that a horizontally polarized photon ends up vertically polarized [see illustration above]. These rotators might be glass cells containing sugar water, for example. At the end of the journey through the rotators, the photon comes to a polarizer, a device that transmits photons with one kind of polarization but absorbs photons with perpendicular polarization. In this thought experiment, the polarizer transmits only horizontally polarized light to a detector. We will start with a photon horizontally polarized, and each rotator will turn the polarization by 15 degrees. It is clear, then, that the photon will never get to the detector, for after passing through all the cells, its polarization will have turned 90 degrees (15 degrees for each of the six rotators) so that it becomes vertical. The polarizer absorbs the photon. This stepwise rotation of the polarization is the quantum evolution that we wish to inhibit. Interspersing a horizontal polarizer between each polarization rotator does the trick. Here's why: After the first rotator, the light is not too much turned from the horizontal. This means that the chance that the photon is absorbed in the first horizontal polarizer is quite small, only 6.7 percent. (Mathematically, it is given by the square of the sine of the turning angle.)
If the photon is not absorbed in the first polarizer, it is again in a state of horizontal polarization; it must be, because that is the only possible state for light that has passed a horizontal polarizer. At the second rotator, the polarization is once again turned 15 degrees from the horizontal, and at the second polarizer, it has the same small chance of being absorbed; otherwise, it is again transmitted in a state of horizontal polarization. The process repeats until the photon comes to the final polarizer. An incident photon has a two-thirds chance of being transmitted through all six inserted polarizers and making it to the detector; the probability is given by the relation (cos^2(15 degrees))^6. Yet as we increase the number of stages, decreasing the polarization-rotation angle at each stage accordingly (that is, 90 degrees divided by the number of stages), the probability of transmitting the photon increases. For 20 stages, the probability that the photon reaches the detector is nearly 90 percent. If we could make a system with 2,500 stages, the probability of the photon being absorbed by one of the polarizers would be just one in 1,000. And if it were possible to have an infinite number of stages, the photon would always get through. Thus, we would have completely inhibited the evolution of the rotation. To realize the quantum Zeno effect, we used the same nonlinear crystal as before to prepare a single photon. Instead of using six rotators and six polarizers, we used just one of each; to achieve the same effect, we forced the photon through them six times, employing three mirrors as a kind of spiral staircase [see illustration]. In the absence of the polarizer, the photon exiting the staircase is always found to be vertically polarized. When the polarizer is present, we found that the photon was horizontally polarized (unless the polarizer blocked it).
These cases occurred roughly two thirds of the time for our six-cycle experiment, as expected from our thought-experiment analysis. Next we set out to make an interaction-free measurement, that is, to detect an opaque object without any photons hitting it, and to do so in a highly efficient manner. We devised a system that was somewhat of a hybrid between the Zeno example and the original Elitzur-Vaidman method. A horizontally polarized photon is let into the system and makes a few cycles (say six again) before leaving. (For this purpose, one needs a mirror that can be "switched" on and off very quickly. Fortunately, such mirrors, which are actually switchable interference devices, have already been developed for pulsed lasers.) At one end of the system is a polarization rotator, which turns the photon's polarization by 15 degrees in each cycle. The other end contains a polarization interferometer. It consists of a polarizing beam splitter and two equal-length interferometer paths with mirrors at the ends [see illustration]. At the polarizing beam splitter, all horizontally polarized light is transmitted, and all vertically polarized light is reflected; in essence, the transmission and reflection choices are analogous to the two paths in the double-slit experiment. In the absence of an object in the polarization interferometer, light is split at the beam splitter according to its polarization, reflects off the mirrors in each path and is recombined by the beam splitter. As a result, the photon is in exactly the same state as before it entered the interferometer (that is, with a polarization turned 15 degrees toward the vertical). So, after six cycles, the polarization ends up rotated to vertical. The situation changes when an opaque object is placed in the vertical polarization path of the interferometer. This situation is analogous to having the six polarizers inserted in the quantum Zeno effect experiment.
So in the first cycle, the chance that the photon, the polarization of which has been turned only 15 degrees from horizontal, enters the vertical-polarization path (and is then absorbed by the object) is very small (6.7 percent, as in the Zeno thought experiment). If this absorption does not happen, the photon must have entered the horizontal path instead, and its polarization is reset to be purely horizontal. Just as in the Zeno example, the whole process repeats at each cycle, until finally, after six cycles, the bottom mirror is switched off, and the photon leaves the system. Measuring the photon's polarization, we find it still to be horizontal, implying that a blocker must reside in the interferometer. Otherwise, the photon would have been vertically polarized when it left. And by using more cycles, we can make the probability that the photon is absorbed by the object as small as we like. Preliminary results from new experiments at Los Alamos National Laboratory have demonstrated that up to 70 percent of measurements could be interaction-free. We soon hope to increase that figure to 85 percent.
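The figures quoted in this section follow from two expressions given in the text: the absorption chance per stage is the square of the sine of the turning angle, and the chance of passing all N stages is (cos^2(90 degrees / N))^N. The snippet below is a minimal sketch that evaluates them for idealized, lossless optics; the function names are hypothetical. Under the same idealization, the survival probability also describes the hybrid scheme, where each cycle plays the role of one stage and the object plays the role of the polarizers.

```python
import math


def absorb_probability_per_stage(stages):
    """Chance of absorption at one polarizer: sin^2 of the per-stage angle."""
    angle = math.radians(90.0 / stages)
    return math.sin(angle) ** 2


def survival_probability(stages):
    """Chance of passing all polarizers: (cos^2(90 degrees / N))^N."""
    angle = math.radians(90.0 / stages)
    return math.cos(angle) ** (2 * stages)


# Stage counts mentioned in the text.
for n in (6, 20, 2500):
    print(n, absorb_probability_per_stage(n), survival_probability(n))
```

For six stages this gives about 6.7 percent absorption per stage and a survival chance close to two thirds; for 2,500 stages the total chance of absorption falls to roughly one in 1,000.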

EXPERIMENTAL REALIZATION of the quantum Zeno effect was accomplished

by making the

photon follow a spiral-staircase path, so that it traversed the polarization

rotator six times.

Inserting a polarizer next to the rotator suppressed the rotation of the

photon's polarization.

Applying Quantum Magic

What good is all this quantum conjuring? We feel that the situation resembles that of the early years of the laser, when scientists knew it to be an ideal solution to many unknown problems. The new method of interaction-free measurement could be used, for instance, as a rather unusual means of photography, in which an object is imaged without being exposed to light. The "photography" process would work in the following way: Instead of sending in one photon, we would send in many photons, one per pixel, and perform interaction-free measurements with them. In those regions where the object did not block the light path of the interferometer, the horizontal polarization of the photons would undergo the expected stepwise rotation to vertical. In those regions where the object blocked the light path, a few of the photons would be absorbed; the rest would have their polarizations trapped in the horizontal state. Finally, we would take a picture of the photons through a polarizing filter after they had made the requisite number of cycles. If the filter were horizontally aligned, we would obtain an image of the object; if vertically aligned, we would obtain the negative. In any case, the picture is made by photons that have never touched the object. These techniques can also work with a semi-transparent object and may possibly be generalized to find out an object's color (although these goals would be more difficult). A variation of such imaging could someday conceivably prove valuable in medicine, for instance, as a means to image living cells. Imagine being able to x-ray someone without exposing them to many penetrating x-rays. Such imaging would therefore pose less risk to patients than standard x-rays. (Practically speaking, such x-ray photography is unlikely to be realized, considering the difficulty of obtaining optical elements for this wavelength of light.)
A candidate for more immediate application is the imaging of the clouds of ultracold atoms recently produced in various laboratories.

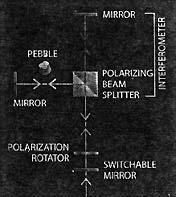

EFFICIENT MEASUREMENTS that are interaction-free combine

the setups of the quantum Zeno effect and the Elitzur-Vaidman scheme. The

photon enters below the switchable mirror and follows the optical paths

six times before being allowed to exit through the mirror. Its final polarization

will still be horizontal if there is a pebble in one light path; otherwise,

it will have rotated to a vertical polarization.


The coldest of these exhibit Bose-Einstein condensation, a new type of quantum state in which many atoms act collectively as one entity. In such a cloud every atom is so cold-that is, moving so slowly-that a single photon can knock an atom out of the cloud. Initially, no way existed to get an image of the condensate without destroying the cloud. Interaction-free measurement methods might be one way to image such a collection of atoms. Besides imaging quantum objects, interaction-free procedures could also make certain kinds of them. Namely, the techniques could extend the creation of "Schr6dinger's cat,' a much loved theoretical entity in quantum mechanics. The quantum feline is prepared so that it exists in two states at once: it is both alive and dead at the same time-a superposition of two states. Earlier this vear workers at the National Institute of Standards and Technology managed to create a preliminary kind of Schrödinger's cat-a "kitten"-with a beryllium ion. They used a combination of lasers and electromagnetic fields to make the ion exist simultaneously in two places spaced 83 nanometers aparta vast distance on the quantum scale. If such an ion were interrogated with the interaction-free methods, the interrogating photon would also be placed in a superposition. It could end up being horizontally and vertically polarized at the same time. In fact, the kind of experimental smp discussed above should be able to place a group of, say, 20 photons in the same superposition. Every photon would 'know" that it has the same polarization as all the others, but none would know its own polarizafion. They would remain in this superposition until a measurement revealed them to be all horizontally polarized or all vertically polarized. The sizable bunch of photons stuck in this peculiar condition would show that quantum effects can be manifested at the macroscopic scale. 
Lying beyond the scope of everyday experience, the notion of interaction-free measurements seems weird, if not downright nonsensical. Perhaps it would seem less strange if one kept in mind that quantum mechanics operates in the realm of potentialities. It is because there could have been an interaction that we can prevent one from occurring. If that does not help, take comfort in the fact that, over the years, even physicists have had a hard time accepting the strangeness of the quantum world. The underlying keys to these quantum feats of magic (the complementary, wave-and-particle aspect of light and the nature of quantum measurements) have been known since 1930. Only recently have physicists started to apply these ideas to uncover new phenomena in quantum information processing, including the ability to see in the dark.

Rubbed out with the quantum eraser Scientific American Jan 1996

Atoms, photons and other puny particles of the quantum world have long been known to behave in ways that defy common sense. In the latest demonstration of quantum weirdness, Thomas J. Herzog, Paul G. Kwiat and others at the University of Innsbruck in Austria have verified another prediction: that one can "erase" quantum information and recover a previously lost pattern. Quantum erasure stems from the standard "two-slit" experiment. Send a laser beam through two narrow slits, and the waves emanating from each slit interfere with each other. A screen a short distance away reveals this interference as light and dark bands. Even particles such as atoms interfere in this way, for they, too, have a wave nature.

QUANTUM ERASURE relies on a special crystal, which makes pairs of photons (red) from a laser beam (purple) in two ways: either when the beam goes through the crystal directly (top) or after reflection by a mirror (bottom). Devices that rotate polarization indicate, and can subsequently erase, a photon's path information.

But something strange happens when you try to determine through which slit each particle passed: the interference pattern disappears. Imagine using excited atoms as the interfering objects and placing, directly in front of each slit, a special box that permits the atoms to travel through it. Each atom therefore has a choice of entering one of the boxes before passing through a slit. It would enter a box, drop to a lower energy state and in so doing leave behind a photon (the particle version of light). The box that contains a photon indicates the slit through which the atom passed. Obtaining this "which-way" information, however, eliminates any possibility of forming an interference pattern on the screen. The screen instead displays a random series of dots, as if sprayed by shotgun pellets. The Danish physicist Niels Bohr, a founder of quantum theory, summarized this kind of action under the term "complementarity": there is no way to have both which-way information and an interference pattern (or equivalently, to see an object's wave and particle natures simultaneously). But what if you could "erase" that telltale photon, say, by absorbing it? Would the interference pattern come back? Yes, predicted Marlan O. Scully of the University of Texas and his co-workers in the 1980s, as long as one examines only those atoms whose photons disappeared (see "The Duality in Matter and Light," by Berthold-Georg Englert, Marlan O. Scully and Herbert Walther; Scientific American, December 1994). Realizing quantum erasure in an experiment, however, has been difficult for many reasons (even though Scully offered a pizza for a convincing demonstration). Excited atoms are fragile and easily destroyed. Moreover, some theorists raised certain technical objections, namely, that the release of a photon can disrupt an atom's forward momentum (Scully argues it does not). The Innsbruck researchers sidestepped the issues by using photons rather than atoms as interfering objects.
In a complicated setup, the experimenters passed a laser photon through a crystal that could produce identical photon pairs, with each member of the pair having about half the frequency of the original photon (an ultraviolet photon became two red ones). A mirror behind the crystal reflected the laser beam back through the crystal, giving it another opportunity to create photon pairs. The two photons of each pair went off in separate directions, and each was ultimately recorded by a detector. Interference comes about because of the two possible ways photon pairs can be created by the crystal: either when the laser passes directly through the crystal, or after the laser reflects off the mirror and back into it. Strategically placed mirrors reflect the photons in such a way that it is impossible to tell whether the direct or reflected laser beam created them. These two birthing possibilities are the "objects" that interfere. They correspond to the two paths that an atom traversing a double slit can take. Indeed, an interference pattern emerges at each detector. Specifically, it stems from the "phase difference" between photons at the two detectors. The phase essentially refers to slightly different travel times through the apparatus (accomplished by moving the mirrors). Photons arriving in phase at the detectors can be considered the bright fringes of an interference pattern; those arriving out of phase correspond to the dark bands. To transform their experiment into the quantum eraser, the researchers tagged one of the photons of the pair (specifically, the one created by the laser's direct passage through the crystal). That way, they knew how the photon was created, which is equivalent to knowing through which slit an atom passed. The tag consisted of a rotation in polarization, which does not affect the momentum of the photon. (In Scully's thought experiment, the tag was the photon left behind in the box by the atom.)
Tagging provides which-way information, so the interference pattern disappeared, as demanded by Bohr's complementarity. The researchers then erased the tag by rotating the polarization again at a subsequent point in the tagged photon's path. When they compared the photon hits on both detectors (using a so-called coincidence counter) and correlated their arrival times and phases, they found the interference pattern had returned. Two other, more complicated variations produced similar results. "I think the present work is beautiful," remarks Scully, who had misgivings about a previous claim of a quantum-eraser experiment performed a few years ago. More than just satisfying academic curiosity, the results could have some practical use. Quantum cryptography and quantum computing rely on the idea that a particle must exist in two states (say, excited or not) simultaneously. In other words, the two states must interfere with each other. The problem is that it is hard to keep the particle in such a superposed state until needed. Quantum erasure might solve that problem by helping to maintain the integrity of the interference. "You can still lose the particle, but you can lose it in such a way that you cannot tell which of two states it was in" and thus preserve the interference pattern, Kwiat remarks. But even if quantum computing never proves practical, the researchers still get Scully's pizza. -Philip Ya
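The tag-and-erase logic can be illustrated with a toy calculation. This is a sketch under simplifying assumptions, not a model of the Innsbruck apparatus. The two creation routes (direct and reflected) are given equal amplitudes and a relative phase, the tag is a 90-degree polarization rotation on the direct route, and the fringe visibility is read off as the phase is scanned. All function names are hypothetical.

```python
import cmath
import math

def polarization(angle_deg):
    """Unit polarization vector at the given angle (0 = horizontal)."""
    theta = math.radians(angle_deg)
    return (math.cos(theta), math.sin(theta))

def rotate(pol, angle_deg):
    """Rotate a polarization vector by angle_deg."""
    theta = math.radians(angle_deg)
    x, y = pol
    return (x * math.cos(theta) - y * math.sin(theta),
            x * math.sin(theta) + y * math.cos(theta))

def detection_probability(phase, pol_direct, pol_reflected):
    """Add the two route amplitudes polarization component by component."""
    shift = cmath.exp(1j * phase)
    amplitude = [(d + shift * r) / 2 for d, r in zip(pol_direct, pol_reflected)]
    return sum(abs(a) ** 2 for a in amplitude)

def visibility(pol_direct, pol_reflected, steps=360):
    """Fringe contrast (max - min) / (max + min) over a full phase scan."""
    probs = [detection_probability(2 * math.pi * k / steps, pol_direct, pol_reflected)
             for k in range(steps)]
    return (max(probs) - min(probs)) / (max(probs) + min(probs))

horizontal = polarization(0)
tagged_pol = rotate(horizontal, 90)    # tag: the direct route becomes distinguishable
erased_pol = rotate(tagged_pol, -90)   # eraser: undo the tag further along the path

untagged = visibility(horizontal, horizontal)
tagged = visibility(tagged_pol, horizontal)
erased = visibility(erased_pol, horizontal)
```

In this toy model the visibility equals the overlap of the two polarization vectors: orthogonal polarizations carry full which-way information and give no fringes, while identical polarizations carry none and give full contrast.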

Quantum Detectives New Scientist 16 May 1998 11

IN A startling demonstration of the weirdness of quantum theory, researchers at the Los Alamos National Laboratory in New Mexico announced last week that they had detected and studied a variety of objects using laser light which never went anywhere near them. Common sense dictates that to find out anything about an object, there must be some form of interaction, even if you only shine light on it. However, the quantum nature of light (in particular, its ability to behave both as a wave and a particle) allows so-called interaction-free measurements to be made. In 1993, Avshalom Elitzur and Lev Vaidman of Tel Aviv University suggested that this trick could be achieved by allowing light to go along two different paths, one of which can contain the object (see diagram). When the object is absent, the setup can be arranged so that a particle-like photon of light can travel via either path with equal probability. Its wave-like properties then produce destructive interference, so the emerging photon continues along the same path and thus no signal is registered at the detector. Once an object blocks one of the paths, a photon travelling along it can't complete its journey and create the destructive interference needed to stop activation of the object detector. So any photon that chooses the path without the object can then make a choice when it reaches the beam splitter, and there is a 50 per cent chance that a signal will be detected, allowing the photon to reveal the presence of an object despite never interacting with it in any way. Interaction-free detection has since been demonstrated in the lab, prompting physicists to dream up real-life applications (see "Crazy logic", 2 May, p 38).
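The two-path argument can be checked with a few lines of amplitude arithmetic. The sketch below assumes an idealised interferometer with two lossless 50/50 beam splitters and the common convention that reflection adds a factor of i. Those choices and all names are assumptions for illustration, not details given in the article.

```python
import math

def beam_splitter(a, b):
    """Lossless 50/50 beam splitter acting on the amplitudes of two paths.

    Assumed convention: transmission keeps the amplitude, reflection adds a factor i.
    """
    s = 1 / math.sqrt(2)
    return (s * (a + 1j * b), s * (1j * a + b))

def interferometer(object_present):
    """Outcome probabilities for a single photon sent into one input port."""
    upper, lower = beam_splitter(1, 0)       # first splitter: equal chance of either path
    absorbed = 0.0
    if object_present:
        absorbed = abs(lower) ** 2           # the object soaks up the lower-path amplitude
        lower = 0
    dark, bright = beam_splitter(upper, lower)  # second splitter recombines the paths
    return {
        "absorbed": absorbed,
        "bright": abs(bright) ** 2,  # port that always fires when both paths are open
        "dark": abs(dark) ** 2,      # object detector: silent when both paths are open
    }

if __name__ == "__main__":
    print(interferometer(object_present=False))
    print(interferometer(object_present=True))
```

With both paths open, the dark port never fires. With one path blocked, half the photons are absorbed, and those that take the free path split evenly at the second beam splitter, so a quarter of all photons reach the object detector and reveal the object without having touched it.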

Now Andrew White and his colleagues at Los Alamos have taken a key step towards such practical devices by measuring the dimensions of small objects, despite the fact that about half the photons emitted effectively never interacted with the target. In a paper in a forthcoming issue of Physical Review A, the team describe how they measured the thickness of objects, which included a knife-edge, a wire and human hair, by passing them across one of the optical paths. And at an international conference on quantum electronics held in San Francisco last week, the team announced that a more sophisticated system has boosted the level of non-interaction to over 70 per cent. "The next logical step for us is to use this higher-efficiency system to produce one-dimensional images of objects," said team leader Paul Kwiat. "If you can do that, you can also produce 2D images-it's not really any more difficult". According to Kwiat, that would open the way to a radically new form of imaging, in which sensitive targets, such as living cells, could be examined using virtually no photons at all. Vaidman says the new work is impressive. "There are many technical difficulties involved in such experiments," he says. "And this work is certainly helping to make progress." Robert Matthews

Uncertainty rules in the quantum world

John Gribbin New Scientist 7 May 94